Small Language Models (SLM) - An Introduction

Small language models (SLMs) are language models with relatively few parameters. Language models such as GPT (Generative Pre-trained Transformer) come in various sizes, from small to extra-large, with anywhere from millions to billions of parameters; small models sit at the lower end of that range.

These models might not have the same level of performance or generalization capabilities as larger models, but they can still be useful for tasks like text generation, classification, summarization, and more.
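To make "millions to billions of parameters" concrete, the sketch below counts the weights of a GPT-2-style model from its depth (n_layer) and width (n_embd). It assumes GPT-2's published layout (50,257-token vocabulary, 1,024 positions, biases on every projection, output layer tied to the token embedding); gpt2_param_count is our own illustrative function, not a library API.

```python
def gpt2_param_count(n_layer: int, n_embd: int,
                     vocab_size: int = 50257, n_positions: int = 1024) -> int:
    """Trainable parameters of a GPT-2-style decoder (assumed GPT-2 layout)."""
    # Token and learned position embeddings.
    embeddings = vocab_size * n_embd + n_positions * n_embd
    # Per block: 2 LayerNorms (4d) + QKV projection (3d^2 + 3d)
    # + attention output (d^2 + d) + MLP up (4d^2 + 4d) + MLP down (4d^2 + d).
    per_block = 12 * n_embd ** 2 + 13 * n_embd
    # Final LayerNorm; the LM head is tied to the token embedding, so adds nothing.
    final_norm = 2 * n_embd
    return embeddings + n_layer * per_block + final_norm


# (n_layer, n_embd) for DistilGPT2 (6 layers at GPT-2 small's width) and GPT-2.
CONFIGS = {
    "distilgpt2": (6, 768),
    "gpt2": (12, 768),
    "gpt2-medium": (24, 1024),
    "gpt2-large": (36, 1280),
    "gpt2-xl": (48, 1600),
}

for name, (layers, width) in CONFIGS.items():
    print(f"{name:12s} {gpt2_param_count(layers, width):>15,d}")
```

Under these assumptions DistilGPT2 comes out at roughly 82 million parameters and the largest GPT-2 (gpt2-xl) at roughly 1.56 billion, about 19 times more.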

Key challenges of Large Language Models

1. Require substantial computational resources and energy to train and run, which can be prohibitively expensive for smaller organizations.

2. Risk of algorithmic bias from insufficiently diverse training data, leading to skewed or inaccurate outputs.

3. Possibility of "hallucinations": fluent outputs that are factually incorrect or fabricated.

Advantages of Small Language Models

1. Efficiency:

Small language models typically require fewer computational resources (such as memory and processing power) compared to larger models. This makes them more practical for deployment on devices with limited resources, such as mobile phones or embedded systems.
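As a rough illustration of the memory difference, the space needed to hold a model's weights is its parameter count times the bytes per parameter. This minimal sketch hard-codes approximate parameter counts for distilgpt2 and gpt2-xl (derived from their published configurations) and deliberately ignores activations, the KV cache and runtime overhead, so actual memory use will be higher.

```python
# Bytes used to store one parameter in each numeric format.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weight_memory_mb(num_params: int, dtype: str = "float32") -> float:
    """Memory for the weights alone, in megabytes (10**6 bytes).

    Excludes activations, the KV cache and framework overhead.
    """
    return num_params * BYTES_PER_PARAM[dtype] / 1e6


# Approximate parameter counts (GPT-2 layout with a tied output layer).
MODELS = {"distilgpt2": 81_912_576, "gpt2-xl": 1_557_611_200}

for name, n in MODELS.items():
    for dtype in ("float32", "float16"):
        print(f"{name:10s} {dtype:8s} {weight_memory_mb(n, dtype):9.1f} MB")
```

For distilgpt2 this works out to about 328 MB in float32 (164 MB in float16), versus roughly 6.2 GB (3.1 GB) for gpt2-xl, before any runtime overhead.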

2. Speed:

With fewer parameters to process, small language models often have faster inference times. This can be crucial in applications where real-time or low-latency responses are required, such as chatbots or voice assistants.
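Latency differences are easy to check on your own hardware. The helper below (median_latency_ms is a hypothetical name, not part of any library) times any zero-argument callable with time.perf_counter, discards a few warm-up calls so one-off costs such as lazy loading do not skew the result, and reports the median.

```python
import statistics
import time
from typing import Callable


def median_latency_ms(fn: Callable[[], object], warmup: int = 2, runs: int = 10) -> float:
    """Median wall-clock time of fn() in milliseconds, after warm-up calls."""
    if runs < 1:
        raise ValueError("runs must be at least 1")
    for _ in range(warmup):
        fn()  # results discarded; absorbs caching and lazy-initialisation costs
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        fn()
        timings.append((time.perf_counter() - start) * 1000.0)
    # The median is less sensitive than the mean to occasional slow runs.
    return statistics.median(timings)


# Stand-in workload so the sketch runs without downloading a model.
print(f"{median_latency_ms(lambda: sum(range(100_000))):.3f} ms")
```

To compare models, you could build two transformers pipelines, e.g. small = pipeline("text-generation", model="distilbert/distilgpt2") and a larger one such as "openai-community/gpt2-xl", then compare median_latency_ms(lambda: small("Hello", max_new_tokens=20)) with the same call on the larger pipeline.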

3. Cost-effectiveness:

Training and fine-tuning smaller models generally require less data and computational resources, which can result in lower costs, especially for organizations with budget constraints.
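For training and fine-tuning, a widely used rule of thumb puts compute at about 6 FLOPs per parameter per training token (roughly 2 for the forward pass and 4 for the backward pass). The sketch below applies it to a hypothetical 10-million-token fine-tuning run; the token count and the hard-coded parameter counts are illustrative assumptions, and the estimate ignores attention's sequence-length term.

```python
def training_flops(num_params: float, num_tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per token."""
    return 6 * num_params * num_tokens


# Hypothetical fine-tuning dataset size, in tokens.
TOKENS = 10_000_000

# Approximate parameter counts (GPT-2 layout with a tied output layer).
for name, n in {"distilgpt2": 81_912_576, "gpt2-xl": 1_557_611_200}.items():
    print(f"{name:10s} {training_flops(n, TOKENS):.2e} FLOPs")
```

Because the estimate is linear in parameter count, fine-tuning distilgpt2 on the same data needs roughly one-nineteenth of the compute of fine-tuning gpt2-xl.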

4. Domain-specific tasks:

For certain domain-specific tasks or specialized applications, a smaller model trained on relevant data might be more effective and efficient than a larger, more generalized model. Small models can be fine-tuned on domain-specific data to achieve better performance for specific tasks.
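A minimal fine-tuning loop for a GPT-2-style causal language model might look like the sketch below, which uses plain PyTorch with AdamW rather than the transformers Trainer. So that it runs offline, the demo trains a tiny randomly initialised GPT2LMHeadModel on one repeated random batch; the commented lines show how you might instead load distilgpt2 and tokenize your own texts (domain_texts is a hypothetical list of strings).

```python
import torch
from transformers import GPT2Config, GPT2LMHeadModel


def fine_tune(model, batches, epochs=1, lr=5e-5, device="cpu"):
    """Minimal causal-LM fine-tuning loop; returns the loss of every step."""
    model.to(device)
    model.train()  # enable dropout during training
    optimizer = torch.optim.AdamW(model.parameters(), lr=lr)
    losses = []
    for _ in range(epochs):
        for input_ids in batches:
            input_ids = input_ids.to(device)
            # With labels == input_ids the model shifts the labels internally
            # and returns the next-token cross-entropy loss.
            loss = model(input_ids=input_ids, labels=input_ids).loss
            loss.backward()
            optimizer.step()
            optimizer.zero_grad()
            losses.append(loss.item())
    return losses


# With the real model (downloads weights on first use), batch size 1, no padding:
#   from transformers import AutoModelForCausalLM, AutoTokenizer
#   tokenizer = AutoTokenizer.from_pretrained("distilbert/distilgpt2")
#   model = AutoModelForCausalLM.from_pretrained("distilbert/distilgpt2")
#   batches = [tokenizer(t, return_tensors="pt").input_ids for t in domain_texts]

# Offline demo: tiny random model, one random batch repeated 50 times.
torch.manual_seed(0)
config = GPT2Config(vocab_size=100, n_positions=32, n_embd=32, n_layer=2, n_head=2)
model = GPT2LMHeadModel(config)
batch = torch.randint(0, config.vocab_size, (4, 16))
losses = fine_tune(model, [batch] * 50, lr=1e-3)
print(f"first loss {losses[0]:.3f} -> last loss {losses[-1]:.3f}")
```

A falling loss on one repeated batch only shows that the loop works; on real domain data you would also evaluate on held-out text.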

5. Privacy and security:

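In some cases, smaller models are preferred for privacy and security reasons. Because they need far less hardware, they can run on-premises or on-device, so sensitive data does not have to be sent to a third-party service. There is also evidence that smaller models memorize less of their training data verbatim than larger ones, which lowers the risk of leaking sensitive information. This can be important in applications where data privacy is a concern.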

6. Ease of deployment:

Smaller models are often easier to deploy and maintain compared to larger models, especially in resource-constrained environments. They require less storage space and can be more easily integrated into existing software systems.
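To check the storage footprint of your own models, you can save a model with save_pretrained, measure the resulting directory, and load it back with local_files_only=True so that nothing is fetched from the Hugging Face Hub. The sketch uses a tiny randomly initialised GPT-2-style model so it runs offline; directory_size_mb and the my-small-model directory name are illustrative, not part of transformers.

```python
import tempfile
from pathlib import Path

from transformers import GPT2Config, GPT2LMHeadModel


def directory_size_mb(path: Path) -> float:
    """Total size of all files under path, in megabytes (10**6 bytes)."""
    return sum(f.stat().st_size for f in path.rglob("*") if f.is_file()) / 1e6


# A tiny random GPT-2-style model stands in for a real checkpoint.
config = GPT2Config(vocab_size=1000, n_positions=64, n_embd=64, n_layer=2, n_head=2)
model = GPT2LMHeadModel(config)

with tempfile.TemporaryDirectory() as tmp:
    export_dir = Path(tmp) / "my-small-model"  # hypothetical export location
    model.save_pretrained(export_dir)
    size_mb = directory_size_mb(export_dir)
    # local_files_only=True: read from disk only, never contact the Hub.
    reloaded = GPT2LMHeadModel.from_pretrained(export_dir, local_files_only=True)

print(f"saved {model.num_parameters():,} parameters in {size_mb:.2f} MB")
```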

7. Exploration and experimentation:

Small language models can serve as a starting point for exploring new ideas and experimenting with different architectures, hyperparameters, and training techniques. They provide a lower barrier to entry for researchers and developers looking to innovate in the field of natural language processing.

Working Example Using Python Libraries

For this example we will use two Python libraries: Hugging Face transformers and PyTorch. The model is distilgpt2. DistilGPT2 (short for Distilled-GPT2) is an English-language model pre-trained with the supervision of the smallest version of Generative Pre-trained Transformer 2 (GPT-2); it has 82 million parameters, compared with 124 million for that smallest GPT-2.

You can read more on the model card: https://huggingface.co/distilbert/distilgpt2

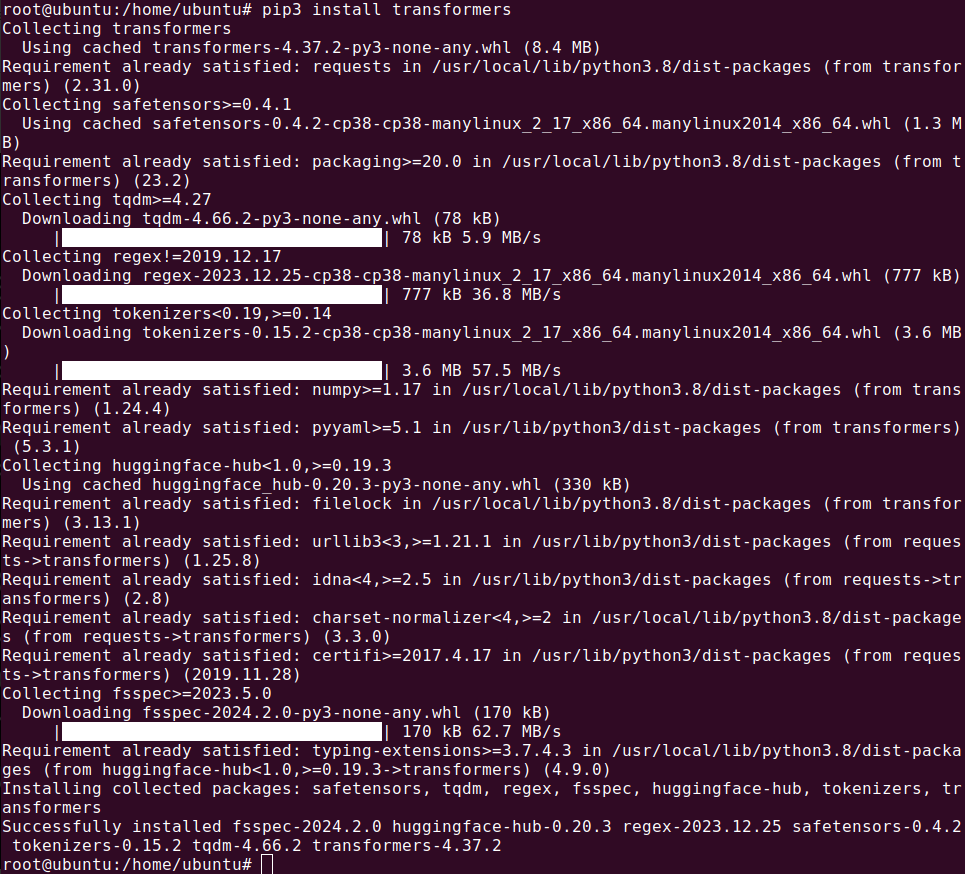

1. Install the Hugging Face transformers library

pip3 install transformers

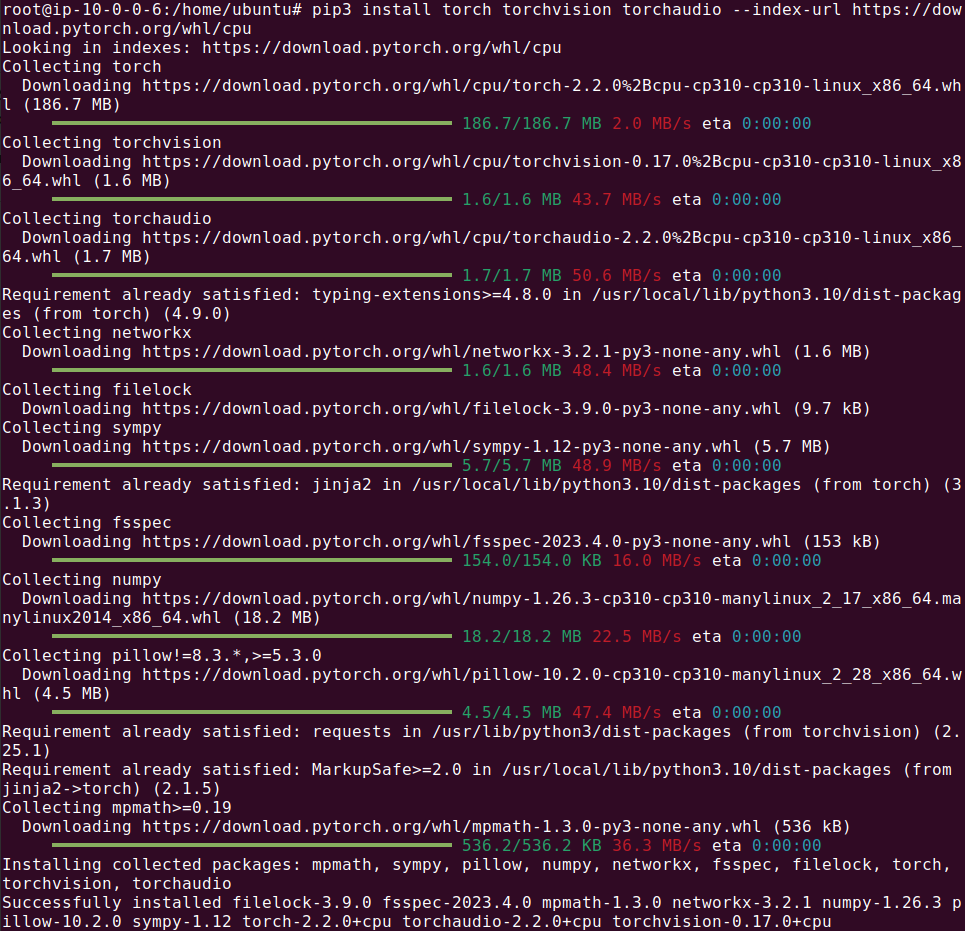

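2. Install PyTorch (the torch package)

For CPU-only systems:

pip3 install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cpu

For GPU (CUDA) systems:

pip3 install torch torchvision torchaudio

On Linux, the default PyPI wheels include CUDA support; on other platforms, use the command generated by the selector on the PyTorch "Get Started" page.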

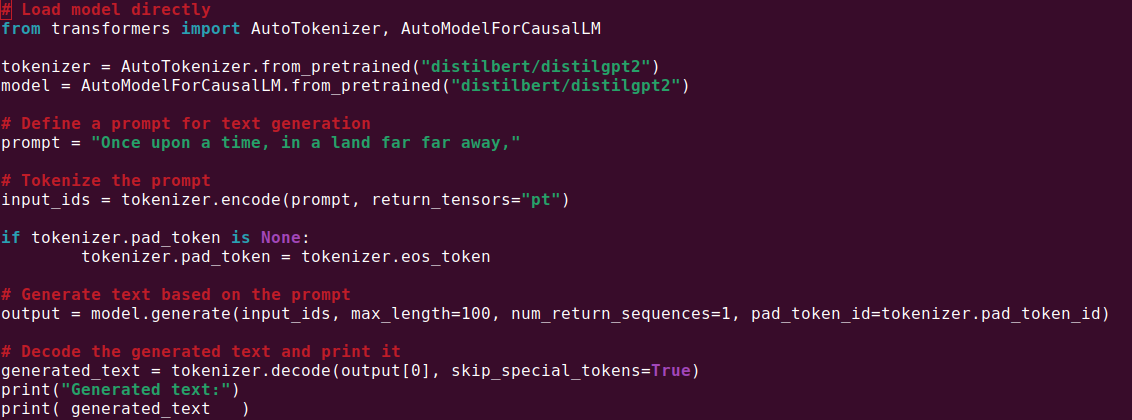
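Once both packages are installed, a quick sanity check (our own script, not taken from the screenshots) confirms that Python can import them and shows whether PyTorch can see a CUDA GPU:

```python
import torch
import transformers

print("torch:", torch.__version__)
print("transformers:", transformers.__version__)

# True only if a CUDA-enabled torch build finds a usable NVIDIA GPU.
cuda_ok = torch.cuda.is_available()
print("CUDA available:", cuda_ok)

# Device string to pass to .to(...) or to pipeline(device=...).
device = "cuda" if cuda_ok else "cpu"
print("Using device:", device)
```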

3. Using your favorite text editor, add the following code:

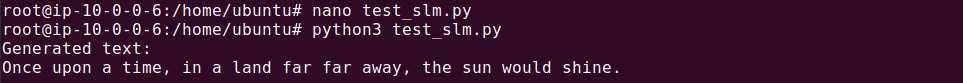
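The code itself appears only in the screenshot. As a hedged stand-in, not necessarily the exact code shown there, a minimal script using the transformers pipeline API with distilgpt2 might look like this (get_generator and generate_text are our own names):

```python
from functools import lru_cache

from transformers import pipeline, set_seed


@lru_cache(maxsize=1)
def get_generator():
    """Build the text-generation pipeline once; weights download on first call."""
    return pipeline("text-generation", model="distilbert/distilgpt2")


def generate_text(prompt: str, max_new_tokens: int = 40, seed: int = 42) -> str:
    """Continue prompt with distilgpt2 and return prompt plus continuation."""
    set_seed(seed)  # make sampling reproducible across runs
    outputs = get_generator()(
        prompt,
        max_new_tokens=max_new_tokens,
        do_sample=True,
        num_return_sequences=1,
    )
    return outputs[0]["generated_text"]
```

Adding a line such as print(generate_text("Small language models are useful because")) at the end of the file and running it with python3 downloads the model on first use and prints a sampled continuation; the text will vary with the seed and library version.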

4. Execute the code as shown in the following screenshot:

Practical Scenarios

1. Small Language Model (SLM):

A customer support chatbot for a particular sector, such as banking or insurance, might use a compact language model fine-tuned on sector-specific data. This lets the chatbot understand customer inquiries within its field and answer them precisely.

2. Large Language Model (LLM):

Organizations use OpenAI's ChatGPT as an AI-driven virtual assistant that can perform diverse tasks, answer complex questions, write code, and be integrated into other software applications as a question-answering agent.

Conclusion

Given the comparisons above, the choice between a small and a large language model depends on the requirements, preferences, and budget of the organization involved. As AI tools continue to evolve, the market may increasingly move toward small language models, driven by the rising cost of meeting ever-growing compute demands and the push for more sustainable technology.

About FAMRO Software Development Services

At FAMRO-LLC, we provide complete software development and software architecture services that assist our clients in achieving successful projects. Our team of skilled developers and architects has a demonstrated history of delivering superior quality code and standardized documentation. We work collaboratively with our clients to establish project goals, scope, and requirements, and then create a comprehensive plan that outlines all the essential steps and milestones.

Whether our clients require assistance for a single project or ongoing software development and architecture services, we are available to support them in reaching their objectives and driving business success.

Please don't hesitate to contact us for a free initial consultation.