Python has become a popular choice for developers exploring AI and Large Language Models (LLMs). Libraries such as Langchain and LiteLLM make working with LLMs easier, even for beginners, by wrapping model APIs in a few simple function calls and helping developers handle much of the complexity of LLMs efficiently.

Even if Python seems challenging to new developers at first, getting started with LLMs takes only a few simple steps. Python's AI ecosystem also makes it easier to build, train, and optimize language models, with support for common machine learning tasks such as handling large datasets, implementing algorithms, visualizing results, and organizing projects as they grow.

Another benefit is Python’s clear and readable syntax, which makes it easy to learn and troubleshoot. It’s beginner-friendly but can also handle more advanced applications in LLMs.

In the following sections, we review two key libraries at a high level. Upcoming blog posts will gradually increase the complexity.

Section 1: What are Large Language Models (LLMs)?

Large Language Models (LLMs) continue to transform Artificial Intelligence with their versatility and broad language understanding. Because they can interpret, generate, and translate large amounts of text in many languages, they represent a significant development in AI technology. They are trained on vast, diverse collections of text, much of it drawn from the internet, which helps them handle a wide range of topics and writing styles.

LLMs are also useful beyond general language processing. One area where they have proved their worth is code completion: while you program, an LLM can predict and fill in the next part of the code, speeding up development and reducing errors. LLM-based code suggestion and completion has become widely used in software development.

LLMs can also be used for document review and knowledge extraction. They can often answer questions about a document with high precision, which makes them useful for many information extraction and management tasks across industries.

LLMs pair especially well with Python, a popular programming language known for its simplicity and extensive range of libraries. Python's straightforward syntax and rich ecosystem make it a natural fit for working with these models. With Python's tools, developers can orchestrate LLMs to perform complex tasks efficiently.

Section 2: What are two key Python LLM libraries?

Although several Python libraries exist for working with LLMs, Langchain and LiteLLM stand out as strong options.

Langchain (visit Langchain Home page) is built around the idea of chains. A chain is a sequence of steps, such as formatting a prompt, calling a model, and parsing its output, composed into a single pipeline. The library provides the building blocks for creating, combining, and restructuring these chains. This makes Langchain a valuable resource for building more complex LLM applications in Python.

LiteLLM (visit LiteLLM's Home Page), on the other hand, is a more streamlined option. It offers a single completion() function that calls models from many providers using the same OpenAI-style message format. Its lightweight design makes it especially friendly for newcomers to AI programming in Python, and its intuitive functions keep the learning curve gentle. In short, Langchain caters to more advanced workflows within the LLM space. LiteLLM is a practical choice for beginners who want to get comfortable with these tasks without being overwhelmed.

Section 4: A step-by-step tutorial on using the Python LiteLLM library

The first step is to install the library. Run the following in your terminal:

pip install litellm openai

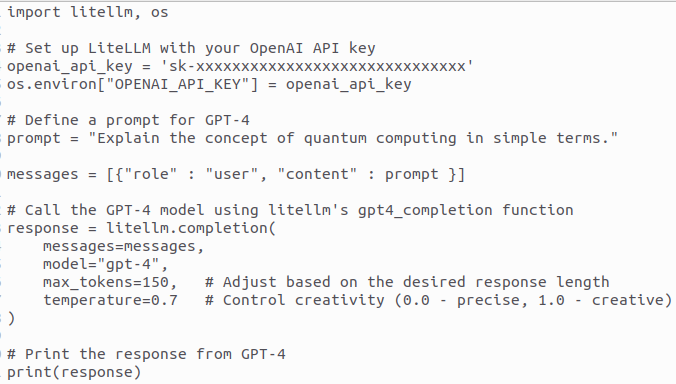
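To confirm that the installation succeeded, you can check the installed package versions from Python using the standard library's importlib.metadata module. This is a small sketch; the helper name installed_version is illustrative and not part of either library.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(package):
    """Return the installed version string of a package, or None if missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Report the packages installed by the pip command above.
for pkg in ("litellm", "openai"):
    print(pkg, installed_version(pkg) or "not installed")
```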

Simple code that illustrates the LiteLLM library:
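The sketch below assembles the steps explained later into a minimal script. It is not necessarily identical to litellm-example.py, and it makes a few assumptions:

- The helper names build_messages and ask are illustrative.
- The key is read from the environment instead of being hard-coded.
- The reply text is read via response.choices[0].message.content, which relies on LiteLLM's OpenAI-compatible response object.

```python
import os
import sys

# Settings mirror the walkthrough below; adjust as needed.
MODEL = "gpt-4"
PROMPT = "Explain the concept of quantum computing in simple terms."


def build_messages(prompt: str) -> list:
    """Wrap a single user prompt in the OpenAI-style chat format."""
    return [{"role": "user", "content": prompt}]


def ask(prompt: str, model: str = MODEL) -> str:
    """Send the prompt through LiteLLM and return the reply text."""
    # Imported inside the function so build_messages() can be reused
    # on machines where litellm is not installed.
    import litellm

    response = litellm.completion(
        model=model,
        messages=build_messages(prompt),
        max_tokens=150,
        temperature=0.7,
    )
    # LiteLLM returns an OpenAI-format response; the reply text lives here.
    return response.choices[0].message.content


if __name__ == "__main__":
    # Assumes the key was exported in the shell: export OPENAI_API_KEY=...
    if os.environ.get("OPENAI_API_KEY"):
        print(ask(PROMPT))
    else:
        print("Set the OPENAI_API_KEY environment variable first.", file=sys.stderr)
```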

Code Explanation

1. Module imports:

import os
import litellm

The litellm module provides a simple way to interact with models such as GPT-4. It wraps OpenAI's API, and those of many other providers, in simplified function calls. The os module is used to access environment variables.

2. Setting OpenAI Key:

openai_api_key = 'sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXX'

The line above hard-codes the key in the openai_api_key variable. This is for demonstration purposes only. In real projects, export the key on the command line or load it from an environment (.env) file or a secrets manager, and never commit it to source control.

Next, set the OPENAI_API_KEY environment variable, which LiteLLM reads when calling OpenAI models:

os.environ["OPENAI_API_KEY"] = openai_api_key
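The previous step advises exporting the key rather than hard-coding it. Below is a minimal standard-library sketch of that approach. The key is exported in the shell (for example, export OPENAI_API_KEY=...), and the script reads it back, failing early with a clear message if it is missing. The helper name require_api_key is illustrative and is not part of LiteLLM.

```python
import os


def require_api_key(name: str = "OPENAI_API_KEY") -> str:
    """Return the API key stored in the environment variable `name`.

    Raises RuntimeError with a readable message instead of failing
    later inside the API call.
    """
    key = os.environ.get(name, "").strip()
    if not key:
        raise RuntimeError(
            f"{name} is not set; export it in your shell before running this script."
        )
    return key
```

LiteLLM already reads OPENAI_API_KEY from the environment on its own, so calling require_api_key() at startup only acts as a guard. You don't need to pass its return value anywhere.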

3. Adding Prompt:

prompt = "Explain the concept of quantum computing in simple terms."

A prompt is a string of instructions for the AI model (in this case, OpenAI's GPT-4). Here, the prompt asks the model to explain quantum computing in simple terms.

4. Messaging Format:

messages = [{"role": "user", "content": prompt}]

Each model expects prompts in a particular format. OpenAI's chat models, such as GPT-4, expect a list of dictionaries. Each dictionary holds a message's text ("content") and the role of its author ("system", "user", or "assistant"). LiteLLM accepts this same format for every provider it supports.

In the example above, a user (a human) is asking for an explanation of quantum computing, so the role is "user".

5. Accessing the AI Model:

response = litellm.completion(
    messages=messages,
    model="gpt-4",
    max_tokens=150,
    temperature=0.7
)

The litellm.completion function is called to interact with GPT-4. The parameters passed are:

messages=messages: The list of messages, where the user prompt is included.

model="gpt-4": Specifies that GPT-4 should be used.

max_tokens=150: Limits the length of the response to 150 tokens.

temperature=0.7: Controls the randomness/creativity of the model's responses. A higher temperature leads to more creative outputs, while a lower temperature makes the output more focused and deterministic.
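To make the temperature parameter more concrete, here is the standard textbook way temperature is applied; it is not OpenAI's actual internal sampling code. The model's raw scores (logits) for each candidate next token are divided by the temperature, then converted into probabilities with a softmax. The scores below are made-up numbers for three hypothetical candidate tokens.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Convert raw scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [x / temperature for x in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(x - m) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


# Made-up scores for three candidate next tokens.
logits = [2.0, 1.0, 0.5]

for t in (0.2, 0.7, 1.5):
    probs = softmax_with_temperature(logits, t)
    print(t, [round(p, 3) for p in probs])
```

Lower temperatures concentrate almost all of the probability on the top-scoring token, which gives more focused output. Higher temperatures spread probability across the alternatives, which gives more varied output. The sketch rejects a temperature of 0 only to avoid dividing by zero.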

You can find the complete code for this example at Blog 2 - Code Samples, in the file litellm-example.py.
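The example above sends a single user message. As the Messaging Format step notes, the messages list can also carry other roles. A common pattern is to start with a system message that sets the model's behavior. This is a minimal sketch; the helper name build_chat and the system text are illustrative assumptions.

```python
def build_chat(user_prompt, system_prompt=None, history=None):
    """Build an OpenAI-style messages list for litellm.completion().

    system_prompt: optional instructions that steer the model's behavior.
    history: optional earlier (user, assistant) exchanges, oldest first.
    """
    messages = []
    if system_prompt:
        messages.append({"role": "system", "content": system_prompt})
    for user_text, assistant_text in history or []:
        messages.append({"role": "user", "content": user_text})
        messages.append({"role": "assistant", "content": assistant_text})
    messages.append({"role": "user", "content": user_prompt})
    return messages


messages = build_chat(
    "Explain the concept of quantum computing in simple terms.",
    system_prompt="You are a patient teacher. Avoid jargon.",
)
# Pass the list exactly as before:
# response = litellm.completion(model="gpt-4", messages=messages)
```

Each call is stateless: the model sees only what is in messages. Including earlier user and assistant turns is therefore how you continue a multi-turn conversation.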

Section 5: A step-by-step tutorial on using the Python Langchain library

Again, the first step is to install the library. Langchain's OpenAI integration ships as a separate package, langchain-openai, which provides the langchain_openai module used below. Run the following in your terminal:

pip install langchain langchain-openai

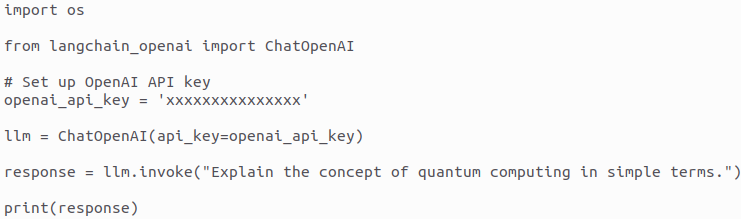

Simple code that illustrates how the Langchain library works:
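The sketch below assembles the steps explained later into a minimal script. It is not necessarily identical to langchain-example.py, and it makes a few assumptions:

- The helper names make_llm and ask are illustrative.
- The key is read from the environment.
- The model, temperature and max_tokens values copy the LiteLLM example; the original snippet passes only the key.

```python
import os
import sys

PROMPT = "Explain the concept of quantum computing in simple terms."


def make_llm(api_key: str):
    """Create a Langchain chat model backed by OpenAI."""
    # Imported lazily so this module loads even without langchain-openai.
    from langchain_openai import ChatOpenAI

    # model/temperature/max_tokens are assumptions mirroring the LiteLLM example.
    return ChatOpenAI(api_key=api_key, model="gpt-4", temperature=0.7, max_tokens=150)


def ask(prompt: str, api_key: str) -> str:
    """Send a prompt and return only the text of the reply."""
    llm = make_llm(api_key)
    response = llm.invoke(prompt)  # an AIMessage
    return response.content


if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:
        print(ask(PROMPT, key))
    else:
        print("Set the OPENAI_API_KEY environment variable first.", file=sys.stderr)
```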

Code Explanation

1. Module imports:

import os

from langchain_openai import ChatOpenAI

The second line imports the ChatOpenAI class from the langchain_openai module, which lets you interact with OpenAI's chat models through Langchain. The os module is used to access environment variables.

2. Setting OpenAI Key:

openai_api_key = 'sk-XXXXXXXXXXXXXXXXXXXXXXXXXXXXXX'

The line above hard-codes the key in the openai_api_key variable. This is for demonstration purposes only. In real projects, export the key on the command line or load it from an environment (.env) file or a secrets manager, and never commit it to source control.

3. Initializing the ChatOpenAI class:

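The following line creates a ChatOpenAI instance using the openai_api_key defined above. Because no model is specified, ChatOpenAI falls back to its default OpenAI model. You can pass, for example, model="gpt-4" to match the LiteLLM example.

llm = ChatOpenAI(api_key=openai_api_key)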

4. Invoking the API:

The invoke method is called on the llm object with the prompt "Explain the concept of quantum computing in simple terms."

This sends the request to the GPT model and returns the model's response to the prompt.

response = llm.invoke("Explain the concept of quantum computing in simple terms.")

5. Printing the Response:

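Finally, print the response. The invoke method returns an AIMessage object. Its content attribute holds the generated text, while printing the whole object also shows metadata such as token usage.

print(response.content)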

You can find the complete code for this example at Blog 2 - Code Samples, in the file langchain-example.py.

How can FAMRO help in your LLM project?

At FAMRO LLC, we specialize in delivering custom AI and Large Language Model (LLM) solutions tailored to meet the unique needs of your business. Our team leverages powerful tools like Langchain and LiteLLM to simplify the complexities of LLM development, making it easier for companies to explore and harness the power of AI.

Whether you're looking to build, train, or optimize sophisticated language models, FAMRO's AI services provide the expertise needed to manage large datasets, create efficient algorithms, and visualize results with ease. Python’s versatility and readable syntax make it the ideal platform for both beginner-friendly and advanced AI applications, and our team ensures seamless integration into your infrastructure.

From initial concept to full-scale deployment, we guide businesses through every stage of their AI journey, offering cloud-native solutions designed for scalability and efficiency. Explore our custom AI services and discover how FAMRO can help you unlock the full potential of AI-driven innovation. Check our Services Page to discover how we can make your next AI project a BIG success.

Please don't hesitate to Contact us for a free initial consultation.