Section 2: Step-by-Step Instructions for Resizing Images with AWS Lambda on Upload to S3

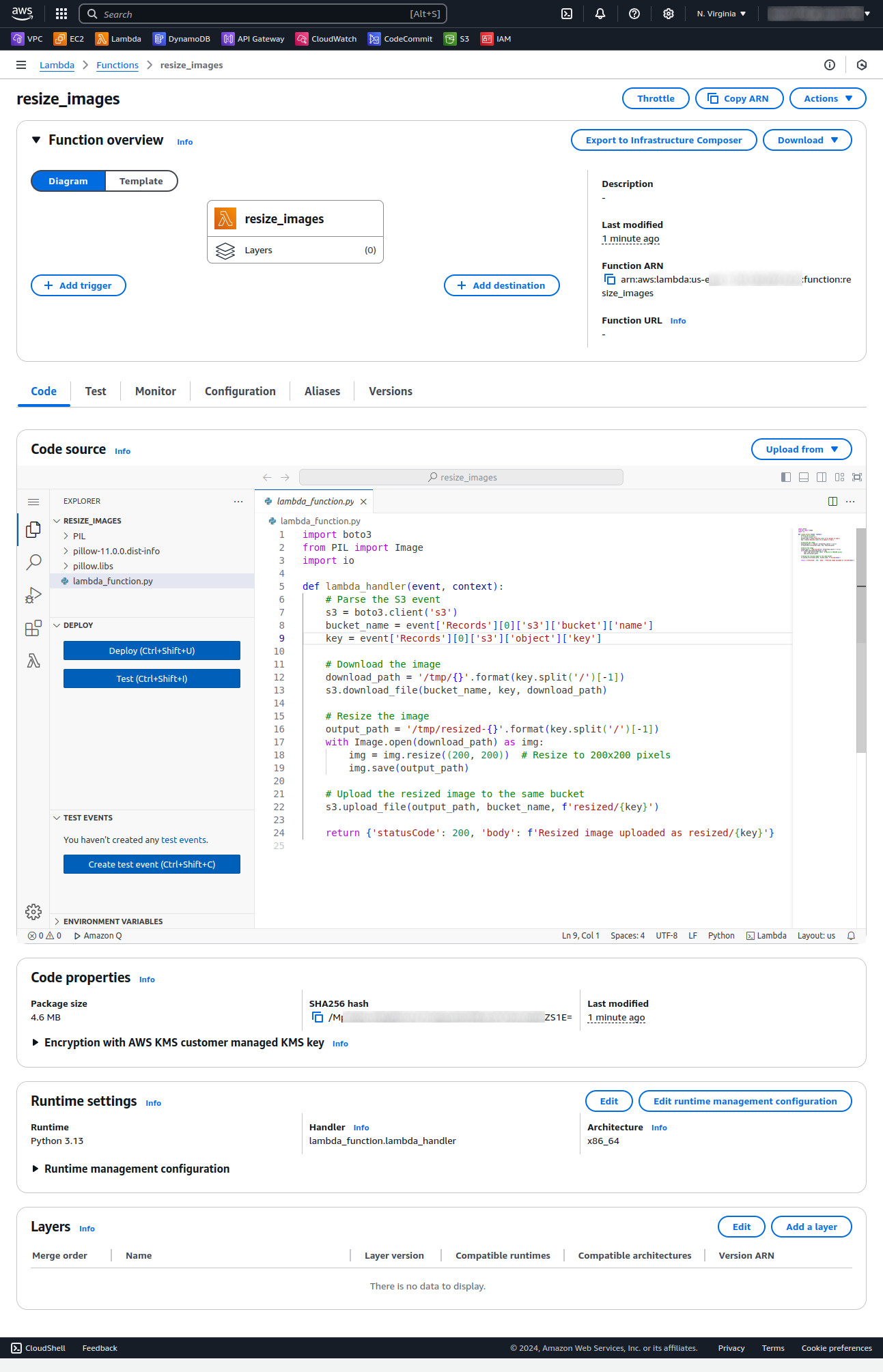

Write the Lambda Function

1. Open a code editor and create a file named lambda_function.py.

2. Add the following code to resize images using the Pillow library:

import os
from urllib.parse import unquote_plus

import boto3
from PIL import Image

# Create the client once so warm invocations reuse it
s3 = boto3.client('s3')


def lambda_handler(event, context):
    # Parse the S3 event (object keys arrive URL-encoded)
    record = event['Records'][0]
    bucket_name = record['s3']['bucket']['name']
    key = unquote_plus(record['s3']['object']['key'])

    # Skip objects this function wrote itself, so the trigger does not loop
    if key.startswith('resized/'):
        return {'statusCode': 200, 'body': f'Skipped {key}'}

    # Download the image
    filename = os.path.basename(key)
    download_path = f'/tmp/{filename}'
    s3.download_file(bucket_name, key, download_path)

    # Resize the image
    output_path = f'/tmp/resized-{filename}'
    with Image.open(download_path) as img:
        resized = img.resize((200, 200))  # Resize to 200x200 pixels
        resized.save(output_path)

    # Upload the resized image to the same bucket
    s3.upload_file(output_path, bucket_name, f'resized/{key}')

    return {'statusCode': 200, 'body': f'Resized image uploaded as resized/{key}'}
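To see what the handler reads from the trigger, here is a minimal sketch that parses a trimmed-down S3 event locally. The sample event and the helper name `extract_bucket_and_key` are illustrative assumptions, not part of the original function; a real event carries many more fields. The point it shows is that S3 URL-encodes object keys in notifications, so a key containing a space arrives with a `+`.

```python
from urllib.parse import unquote_plus

# Trimmed-down example of an S3 upload event (illustrative values only).
sample_event = {
    "Records": [
        {
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "my-bucket-09"},
                "object": {"key": "uploads/summer+photo.png"},
            },
        }
    ]
}


def extract_bucket_and_key(event):
    """Return (bucket, key) for the first record, with the key URL-decoded."""
    record = event["Records"][0]
    bucket = record["s3"]["bucket"]["name"]
    # S3 URL-encodes keys in notifications; spaces arrive as '+'.
    key = unquote_plus(record["s3"]["object"]["key"])
    return bucket, key


bucket, key = extract_bucket_and_key(sample_event)
print(bucket, key)
```

Passing the decoded key to `download_file` avoids "not found" errors for file names that contain spaces or other special characters.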

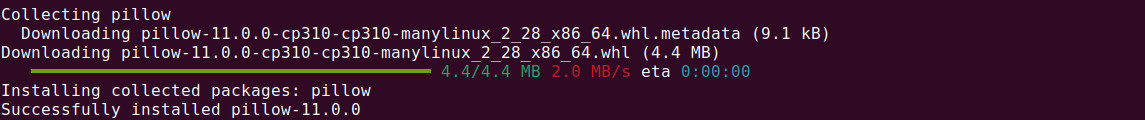

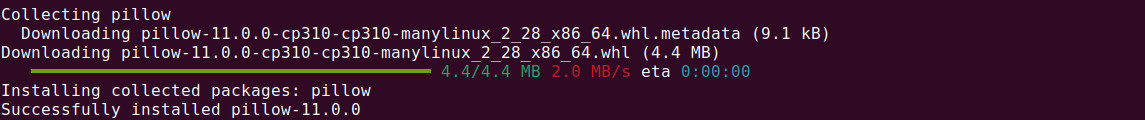

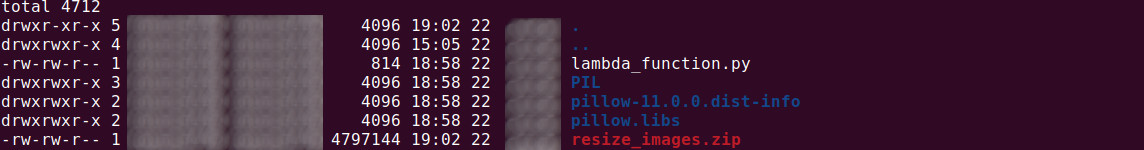
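Resizing every image to a fixed 200x200 stretches anything that is not square. If that is not what you want, one option is to compute a target size that keeps the aspect ratio. The helper below is a hypothetical sketch (the name `fit_within` and the no-upscaling rule are assumptions, not part of the original function).

```python
def fit_within(width, height, max_side=200):
    """Scale (width, height) to fit inside a max_side square, keeping the aspect ratio."""
    # Use the tighter of the two ratios; cap at 1.0 so small images are not enlarged.
    scale = min(max_side / width, max_side / height, 1.0)
    return max(1, round(width * scale)), max(1, round(height * scale))


print(fit_within(800, 400))
print(fit_within(100, 50))
```

Inside the handler this could be used as `img.resize(fit_within(*img.size))`. Pillow's own `Image.thumbnail((200, 200))` offers similar behavior by shrinking the image in place.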

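3. Install the Pillow library into the current directory with the following command. Pillow includes compiled code, so run the command on Linux (or otherwise obtain Linux-compatible wheels) for the package to load on Lambda: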

pip3 install pillow -t .

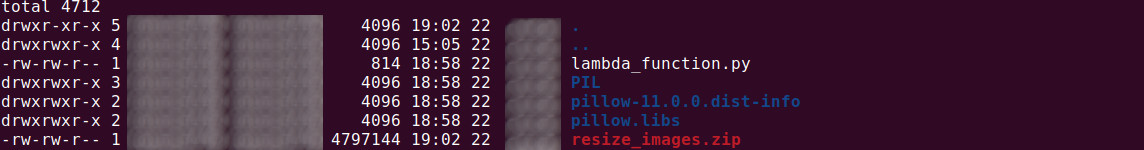

4. Zip the function and dependencies:

zip -r resize_images.zip .

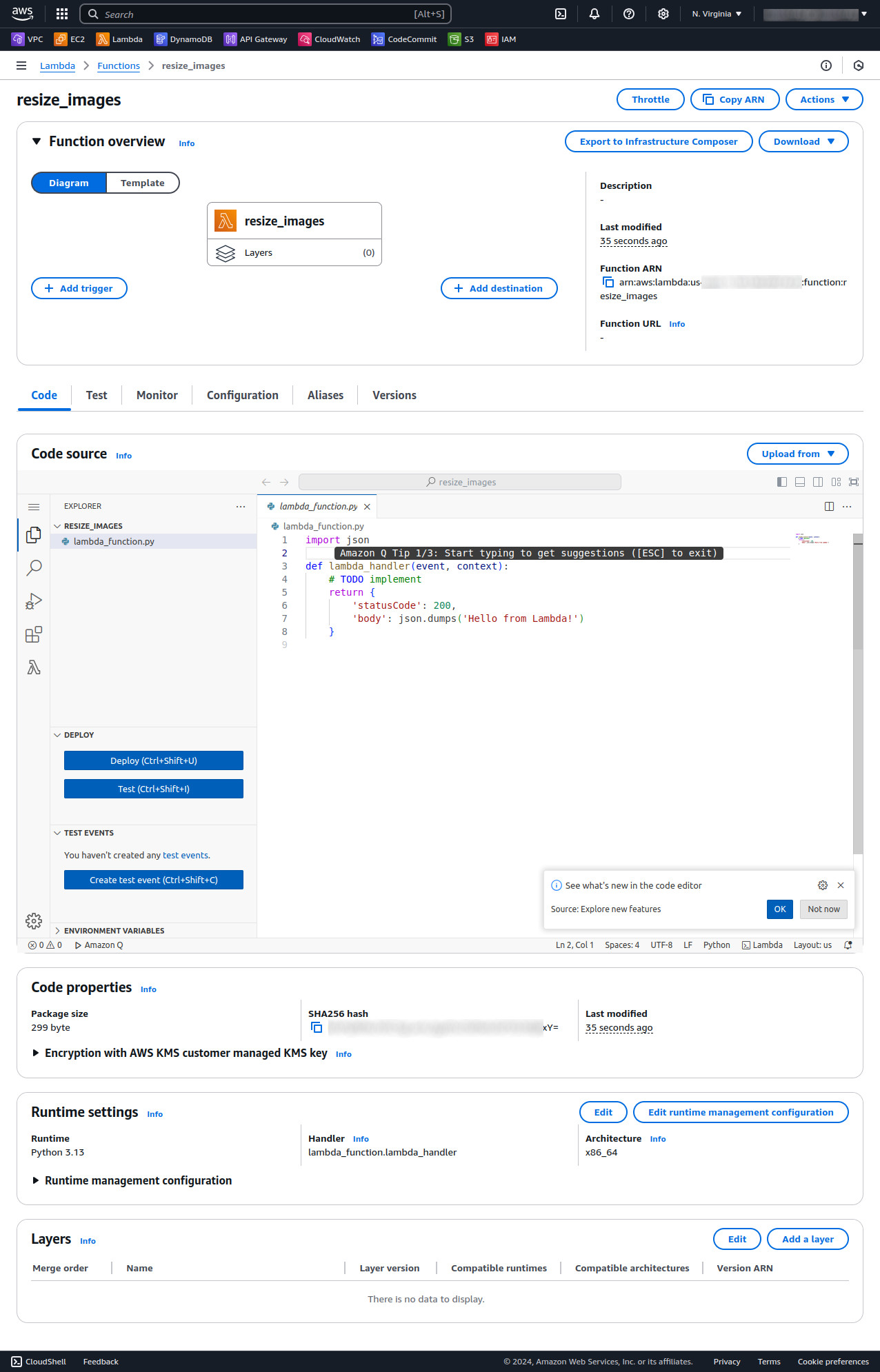
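Lambda looks for `lambda_function.py` at the top level of the archive, not inside a subfolder. As a quick sanity check before uploading, the sketch below inspects a zip with Python's standard `zipfile` module. The helper name `handler_at_root` is an assumption for illustration, and the demo builds a tiny stand-in archive rather than reading your real one.

```python
import os
import tempfile
import zipfile


def handler_at_root(zip_path, handler_file="lambda_function.py"):
    """Return True if handler_file sits at the top level of the archive."""
    with zipfile.ZipFile(zip_path) as archive:
        return handler_file in archive.namelist()


# Self-contained demo: build a tiny archive in a temp directory and check it.
with tempfile.TemporaryDirectory() as tmp:
    demo_zip = os.path.join(tmp, "resize_images.zip")
    with zipfile.ZipFile(demo_zip, "w") as archive:
        archive.writestr("lambda_function.py", "def lambda_handler(event, context): ...\n")
        archive.writestr("PIL/__init__.py", "")
    demo_ok = handler_at_root(demo_zip)

print(demo_ok)
```

To check the real package, call `handler_at_root("resize_images.zip")` from the directory where you ran the zip command.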

Create the Lambda Function

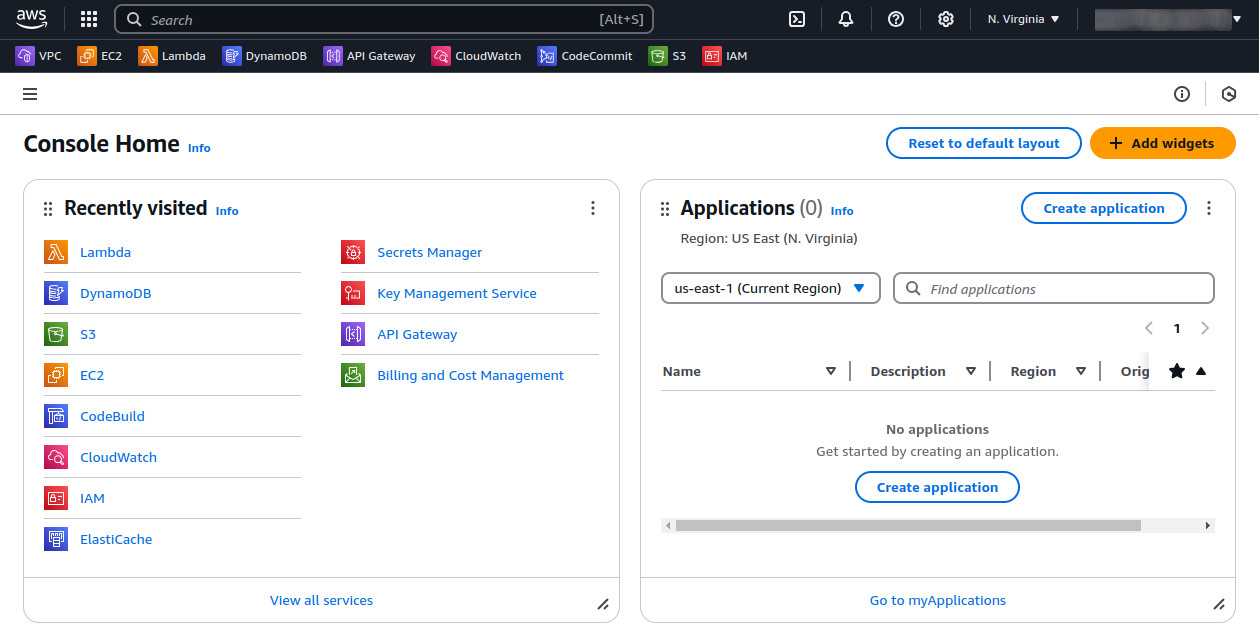

1. Log in to the AWS Management Console.

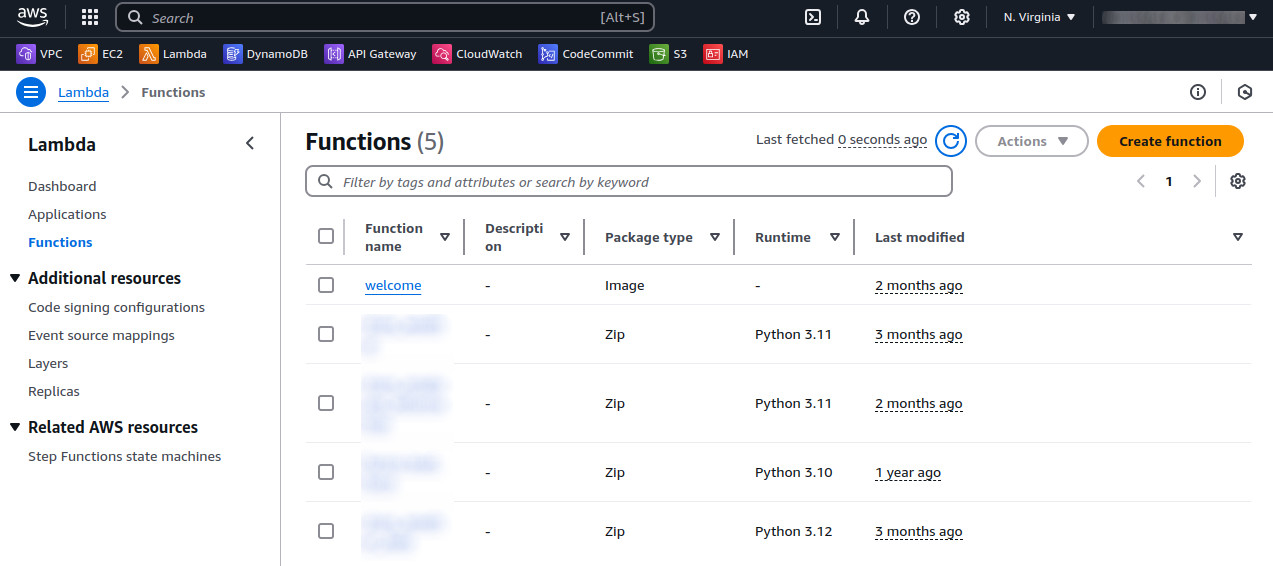

2. Navigate to the Lambda service in the AWS Management Console.

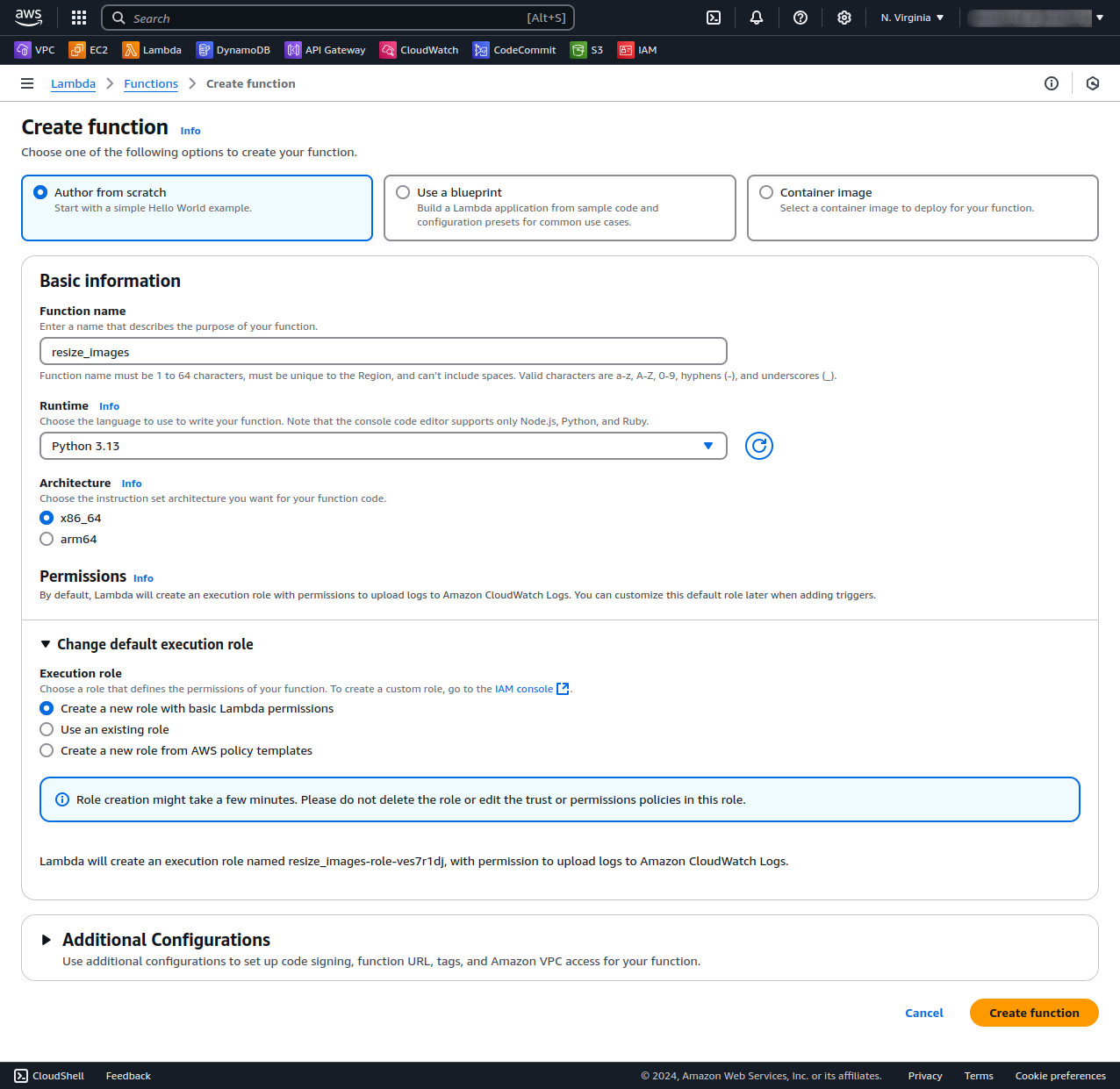

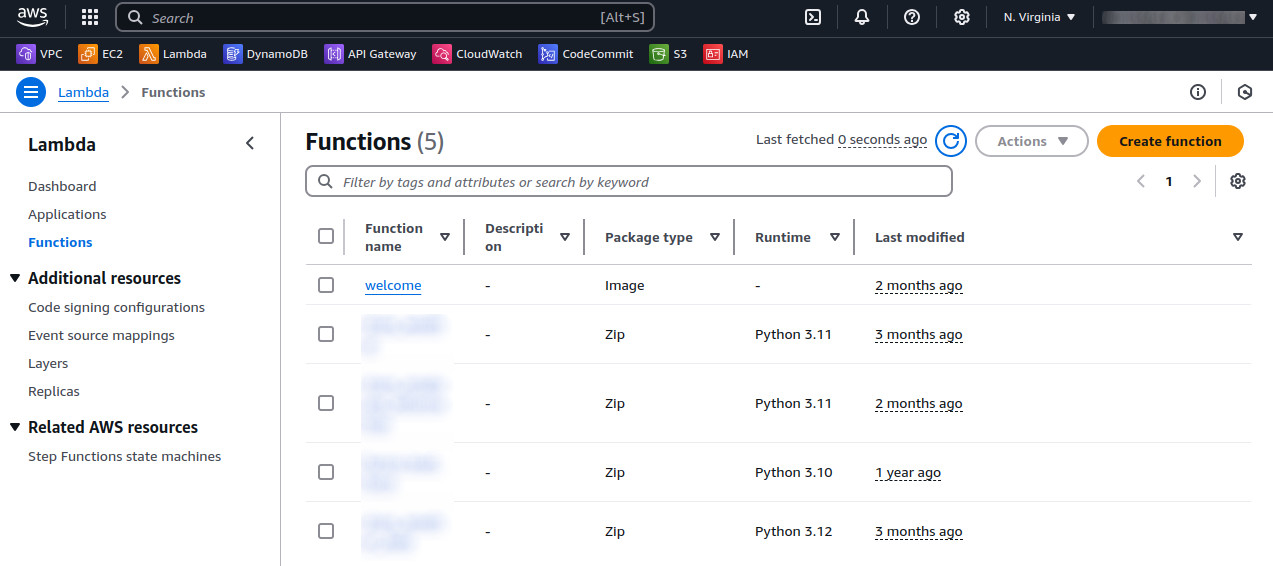

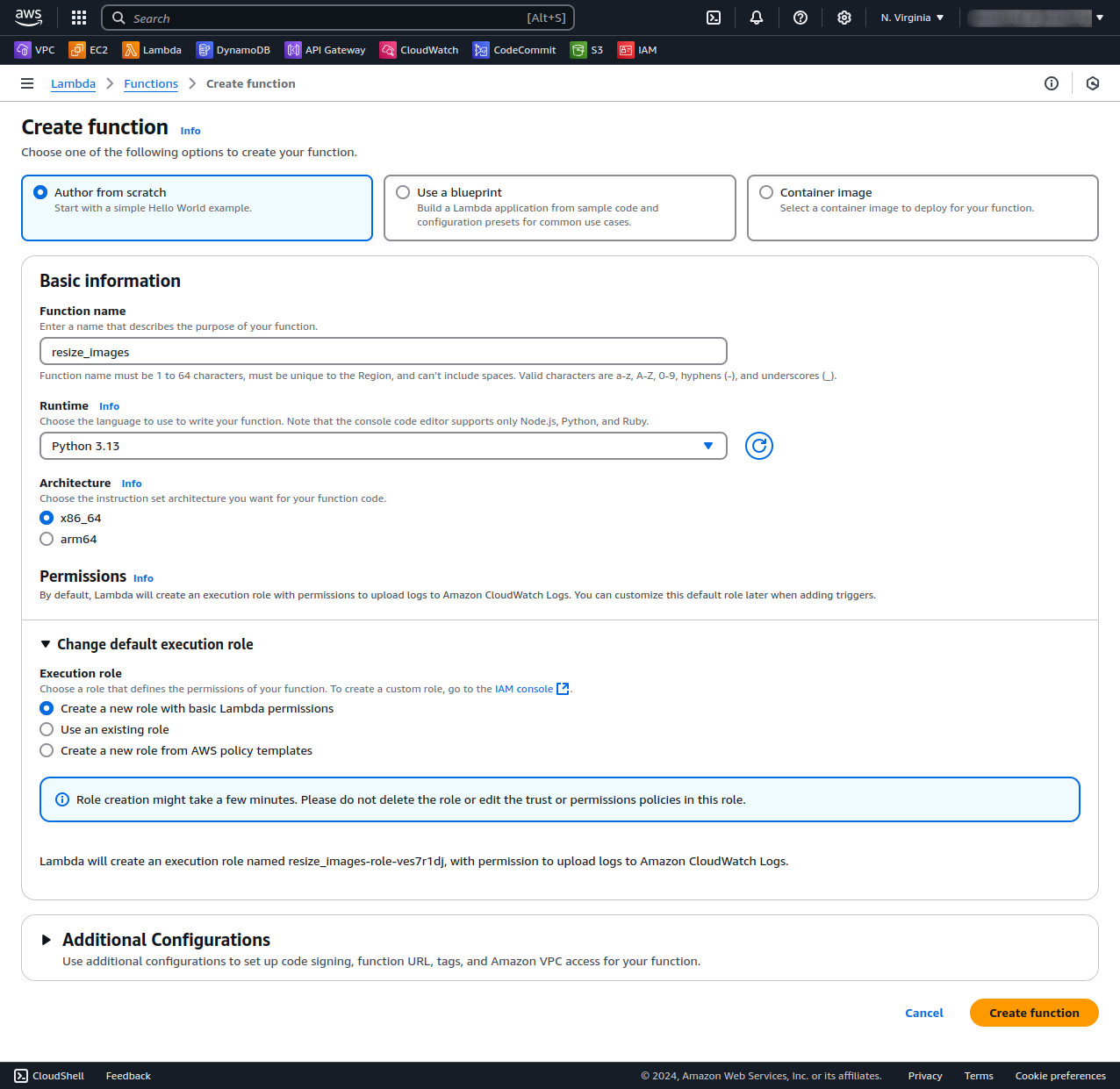

3. Click Create function and choose Author from scratch.

4. Enter "resize_images" as the function name.

Choose Python 3.x as the runtime.

Select "Create a new role with basic Lambda permissions" for permissions.

Click Create function.

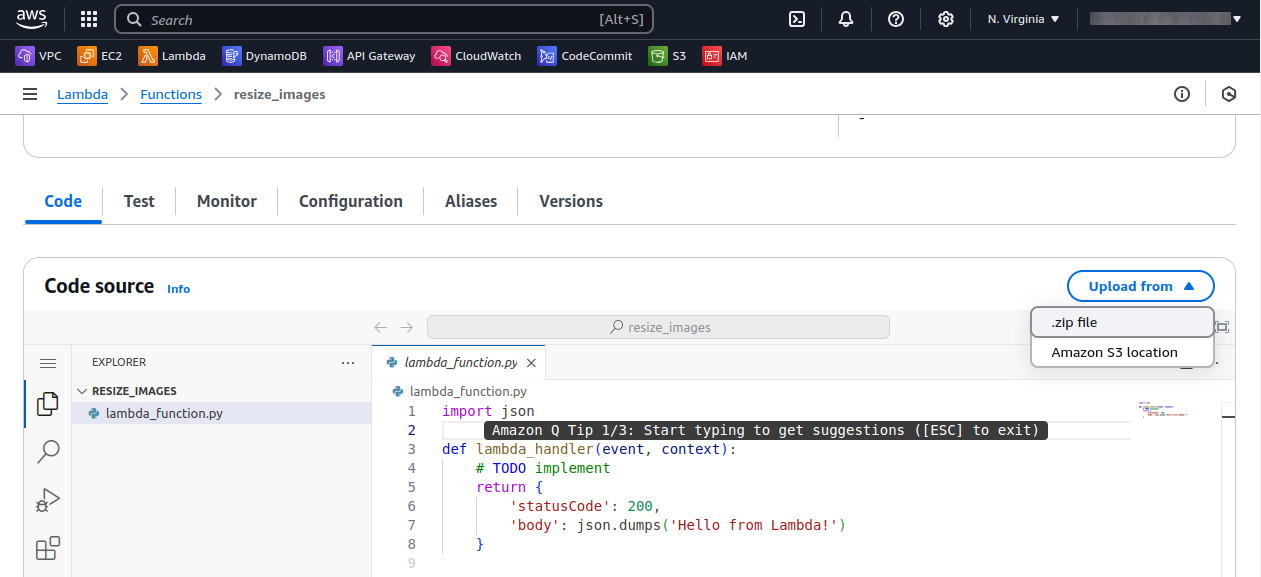

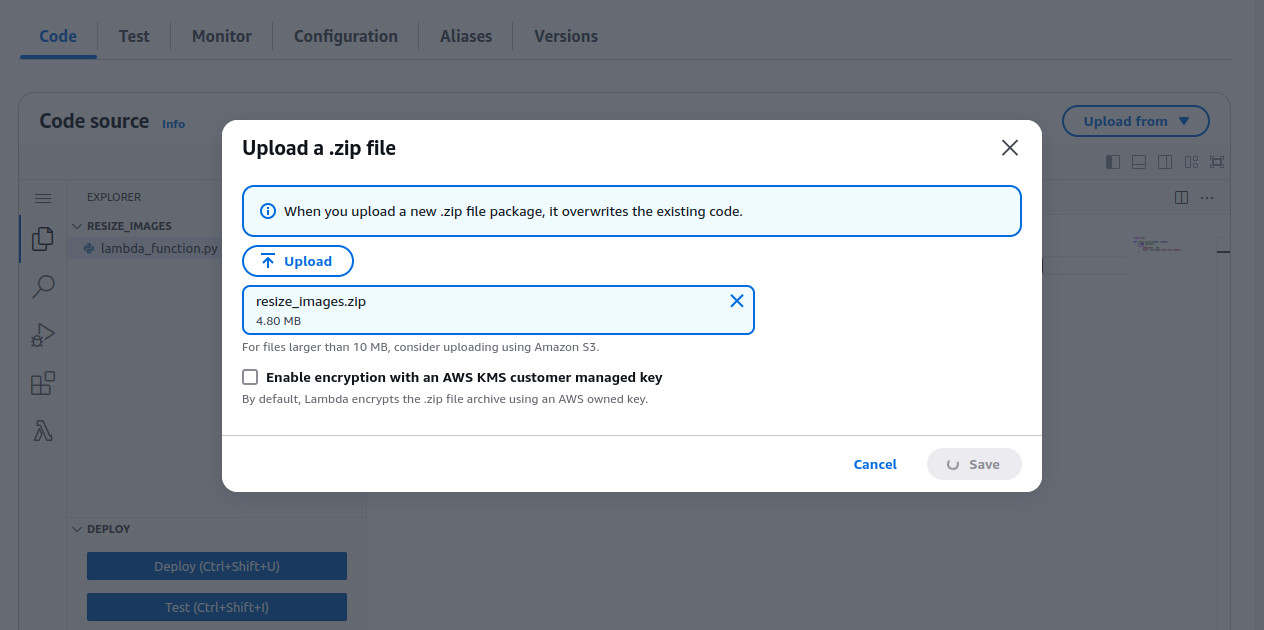

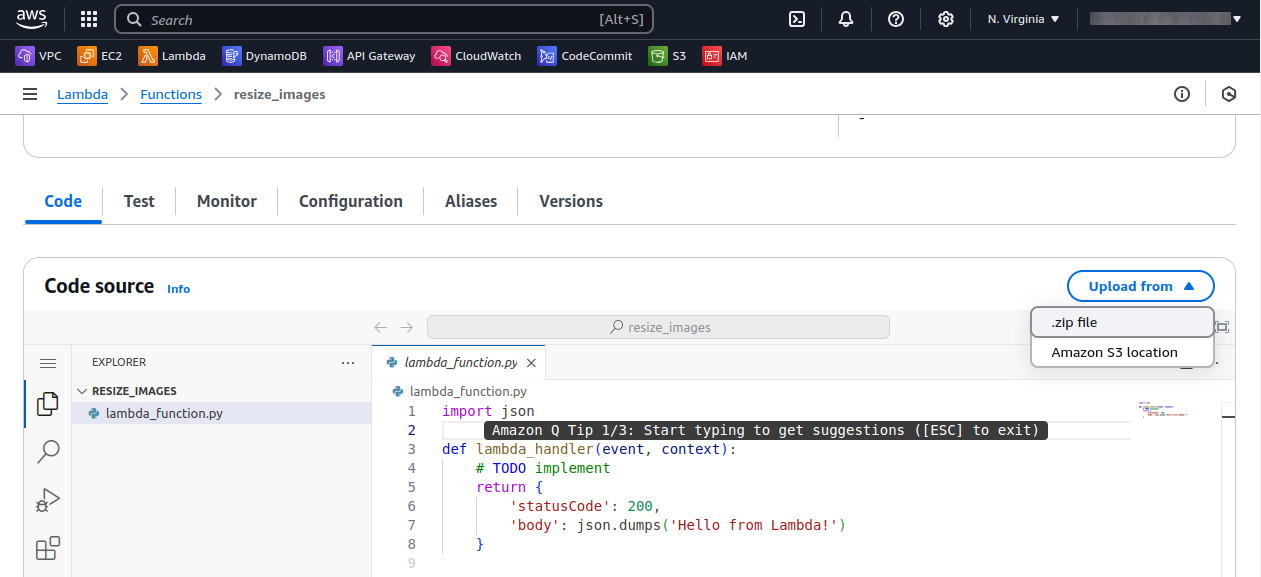

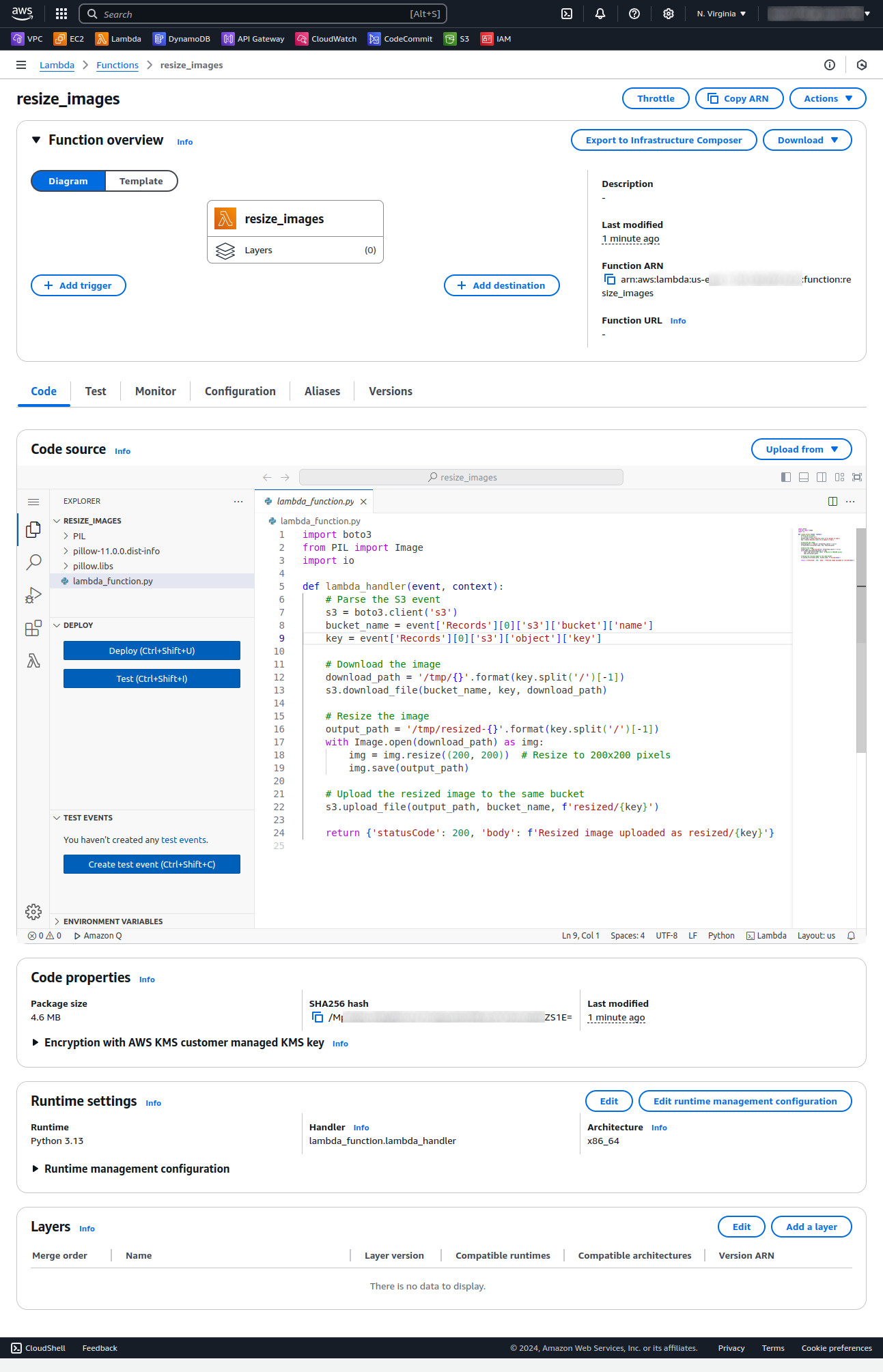
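The console steps above can also be scripted with boto3. The following is a rough sketch, not the method used in this guide: the runtime string `python3.12`, the 30-second timeout, and the 256 MB memory size are assumptions, and unlike the console, the API does not create an execution role for you, so an existing role ARN must be supplied.

```python
def build_create_function_args(role_arn, zip_bytes, runtime="python3.12"):
    """Assemble keyword arguments for the Lambda create_function call."""
    return {
        "FunctionName": "resize_images",
        "Runtime": runtime,  # assumption: any supported Python 3.x runtime
        "Role": role_arn,  # must already exist when using the API
        "Handler": "lambda_function.lambda_handler",  # file name + function name
        "Code": {"ZipFile": zip_bytes},
        "Timeout": 30,  # assumption: the 3-second default can be short for images
        "MemorySize": 256,  # assumption: a little headroom for Pillow
    }


def create_function(role_arn, zip_path="resize_images.zip"):
    """Create the function from a local zip (requires AWS credentials)."""
    import boto3  # imported here so the helper above works without boto3

    with open(zip_path, "rb") as package:
        args = build_create_function_args(role_arn, package.read())
    return boto3.client("lambda").create_function(**args)
```

The `Handler` value ties back to the earlier steps: `lambda_function` is the file name without `.py`, and `lambda_handler` is the function defined inside it.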

Upload the Zip File

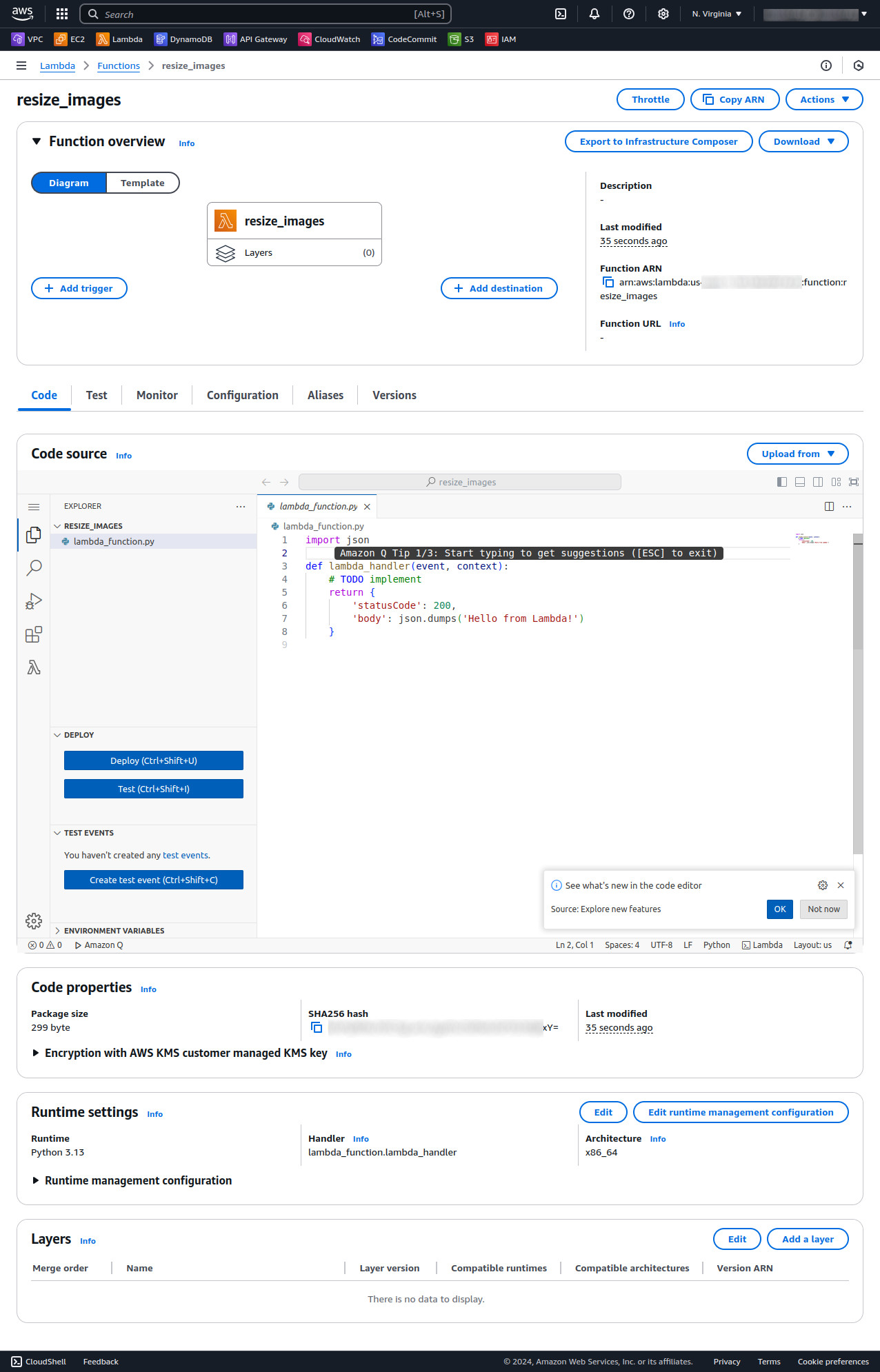

1. In the Lambda function console, go to the Code section.

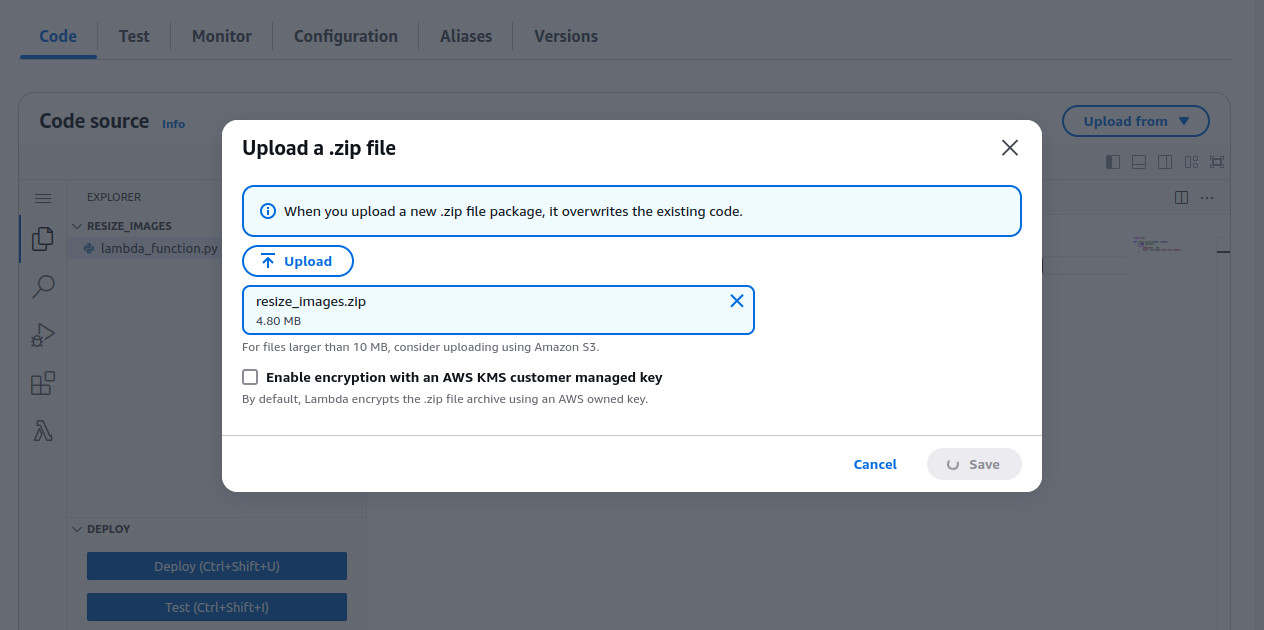

2. Click Upload from > .zip file.

3. Select resize_images.zip (the zip file we created earlier) and click Save.

4. If the editor does not show the new code, refresh the page.

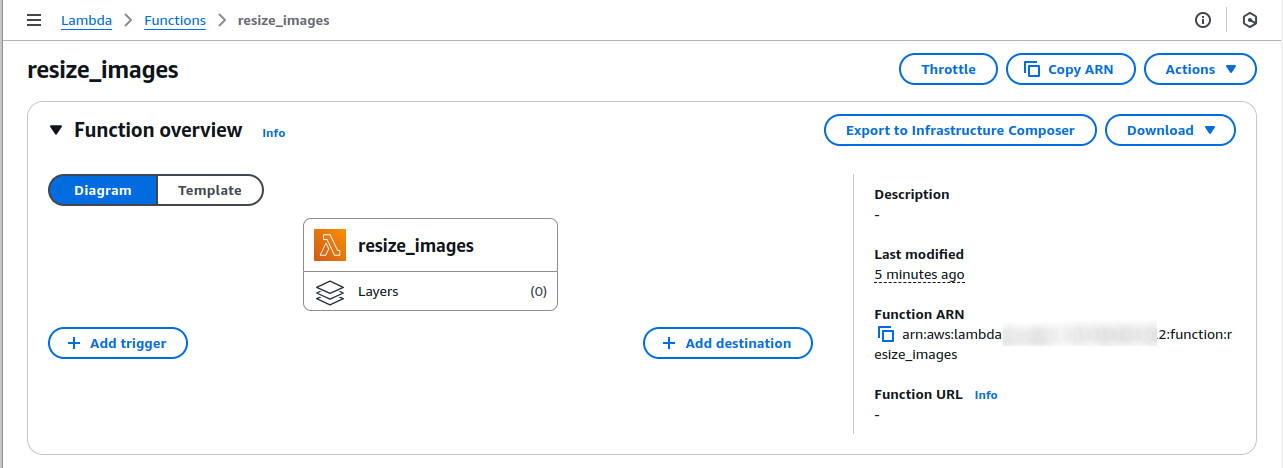

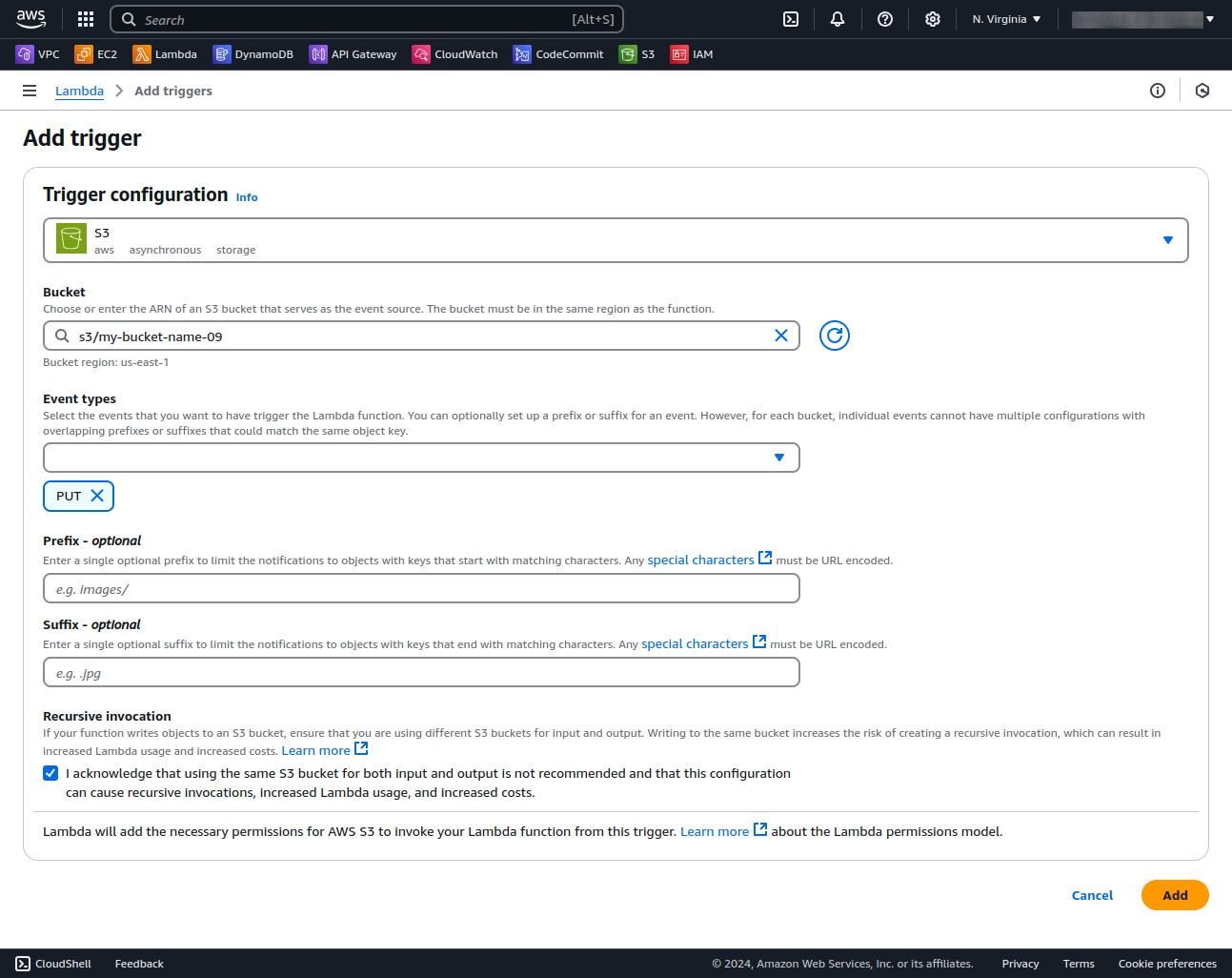

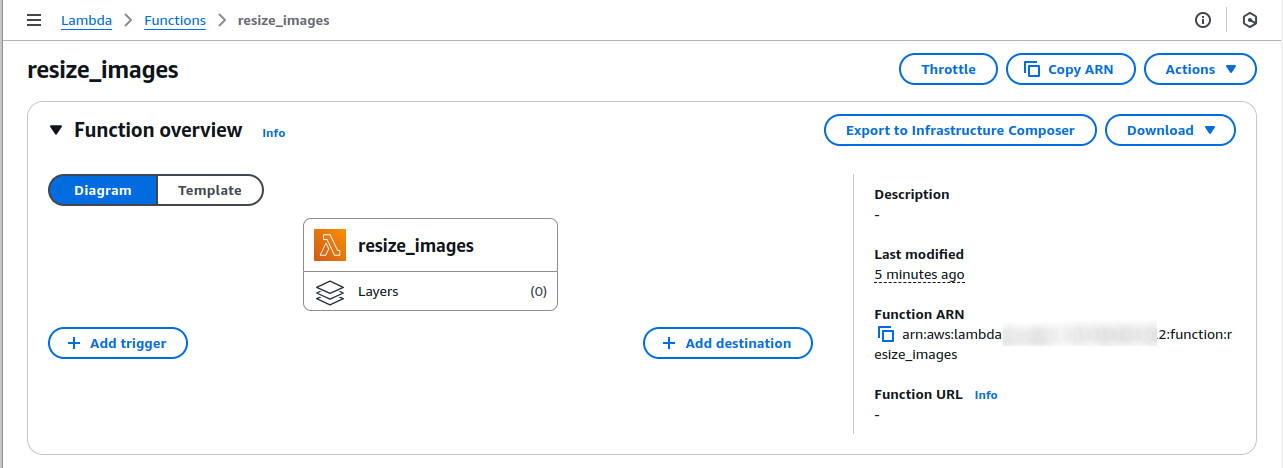

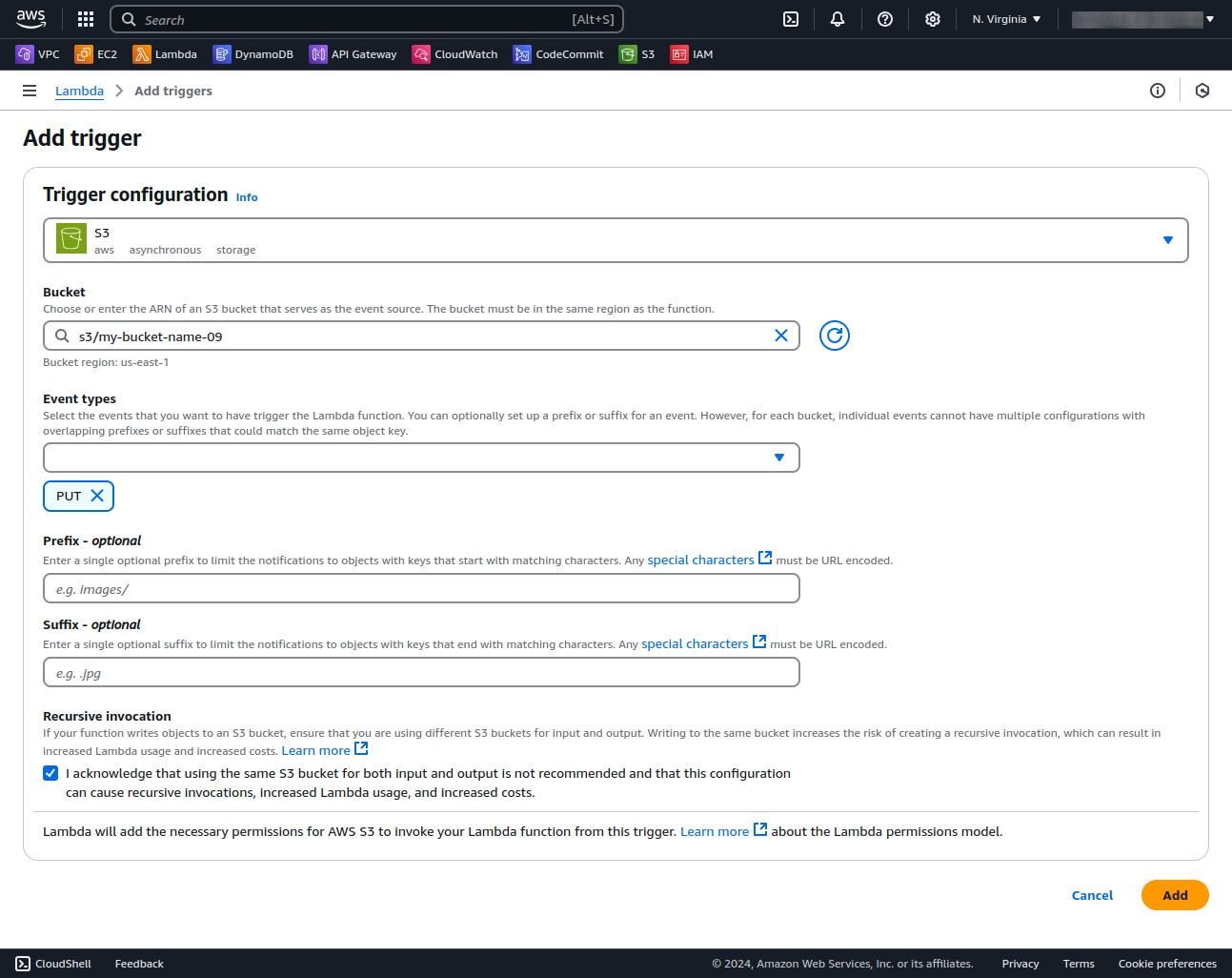

Configure the S3 Trigger

1. Scroll down to the Function overview section and click Add trigger.

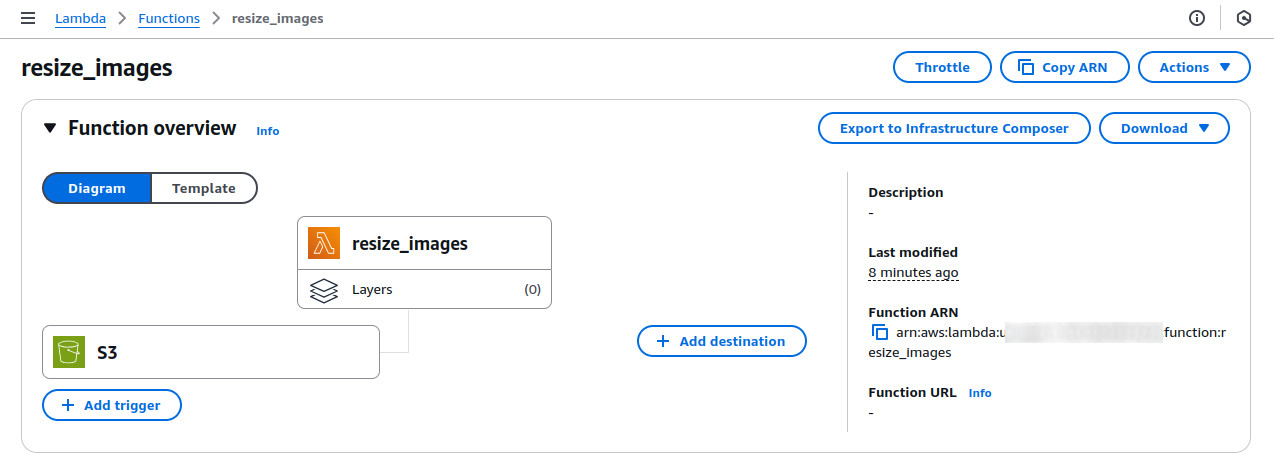

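2. Choose S3 from the trigger options. Select the bucket my-bucket-09. For the event type, select PUT (for uploads), then click Add. Because the function writes its output back to the same bucket under resized/, those uploads also raise PUT events; the function should ignore keys under resized/ (or write to a separate bucket) so that it does not invoke itself in a loop.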

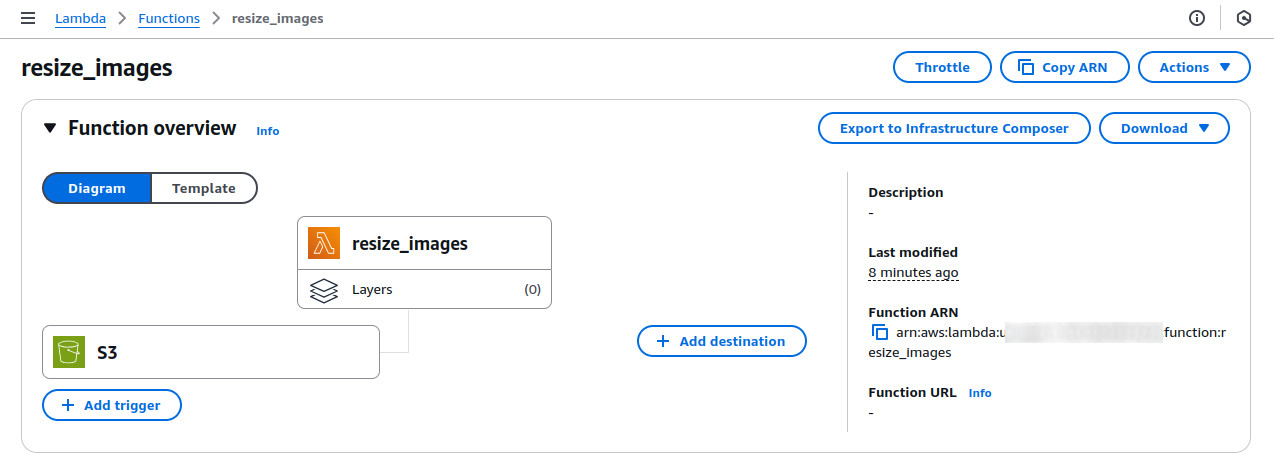

3. You will be redirected to the Lambda function page, where the new trigger is listed.

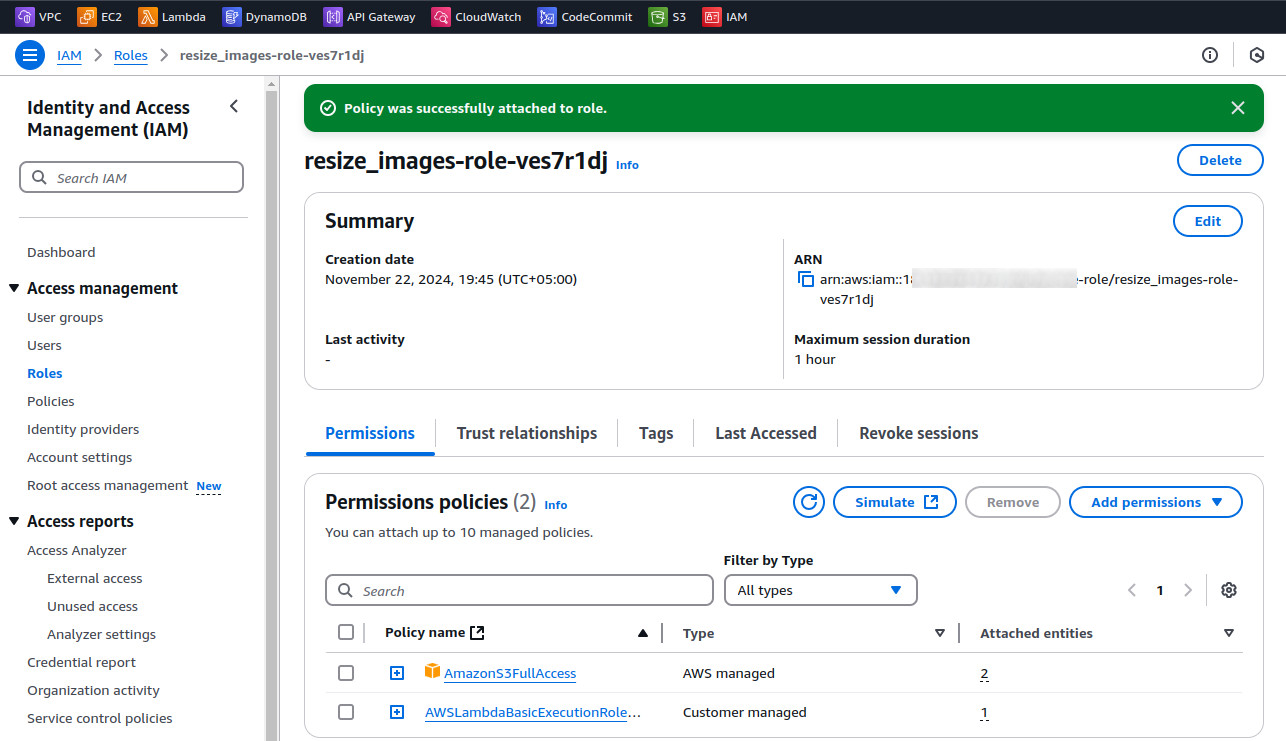

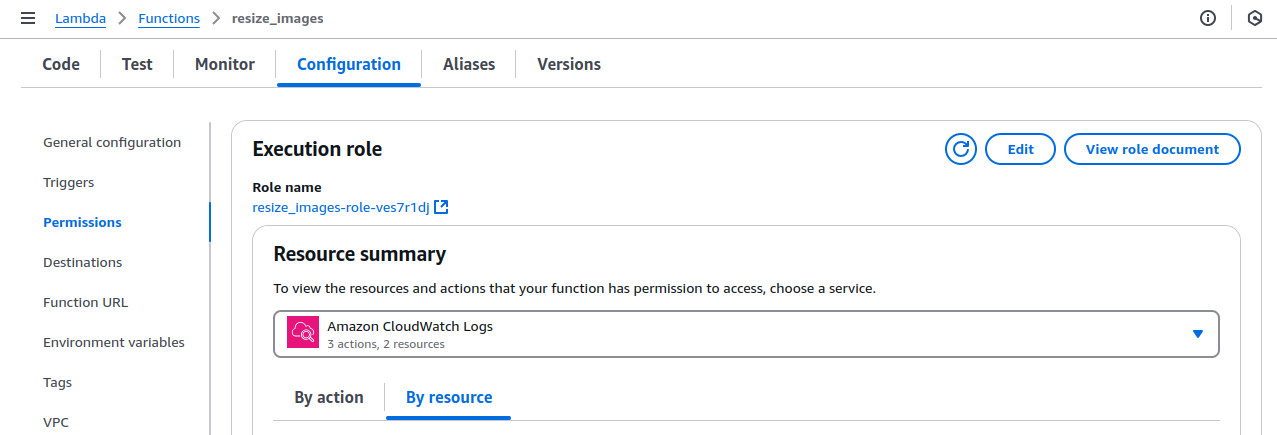

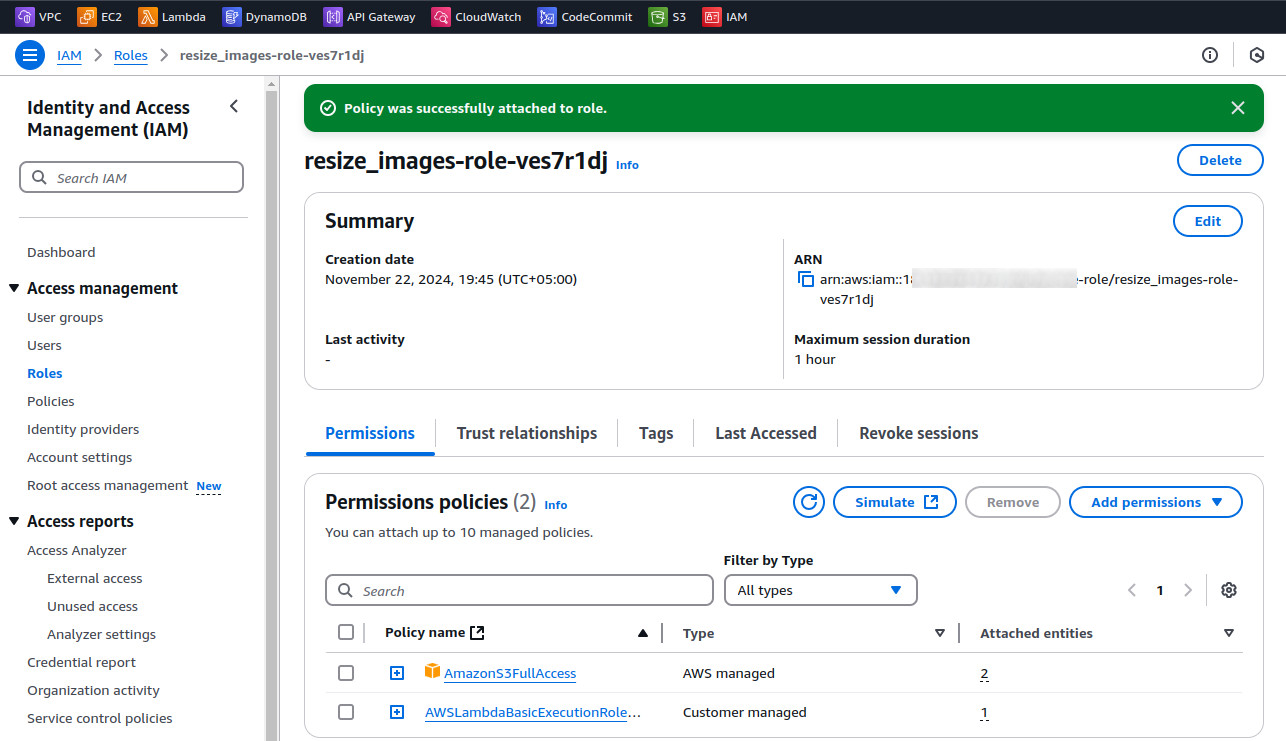

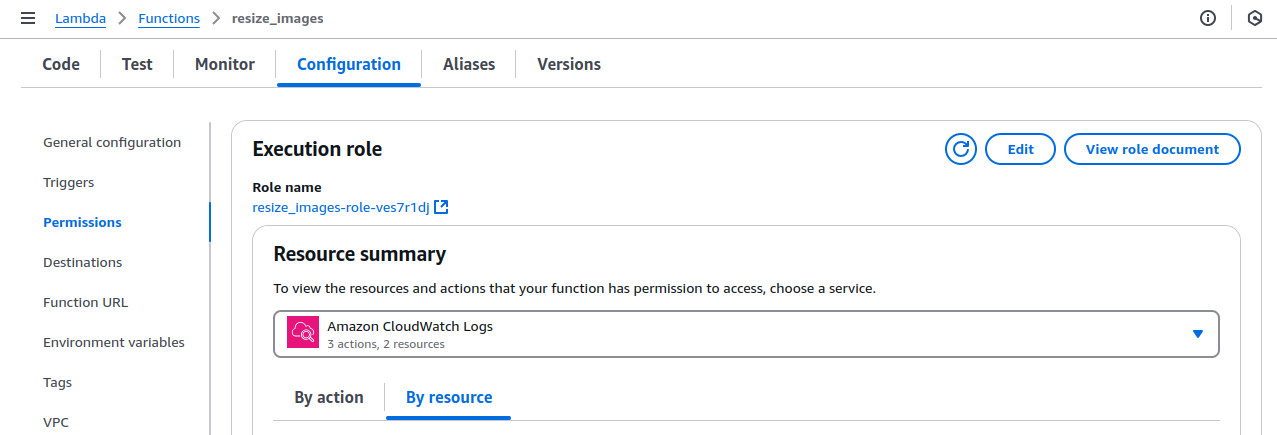
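For reference, the trigger configured above corresponds roughly to an S3 bucket notification. The sketch below builds that configuration as a plain dictionary and shows how it might be applied with boto3. The function ARN and the `uploads/` prefix are hypothetical placeholders. Note also that the console grants S3 permission to invoke the function automatically, whereas a scripted setup would need to add that permission separately.

```python
def build_notification_config(function_arn, prefix=None):
    """Build an S3 notification configuration that invokes a Lambda on PUT."""
    config = {
        "LambdaFunctionArn": function_arn,
        "Events": ["s3:ObjectCreated:Put"],
    }
    if prefix:
        # Optional: only fire for keys under this prefix (e.g. "uploads/").
        config["Filter"] = {
            "Key": {"FilterRules": [{"Name": "prefix", "Value": prefix}]}
        }
    return {"LambdaFunctionConfigurations": [config]}


def apply_notification(bucket, notification_config):
    """Attach the configuration to the bucket (requires AWS credentials)."""
    import boto3  # imported here so the builder above works without boto3

    return boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket, NotificationConfiguration=notification_config
    )
```

Restricting the trigger to an upload prefix is one way to keep objects written under `resized/` from invoking the function again.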

Adjust Bucket Permissions

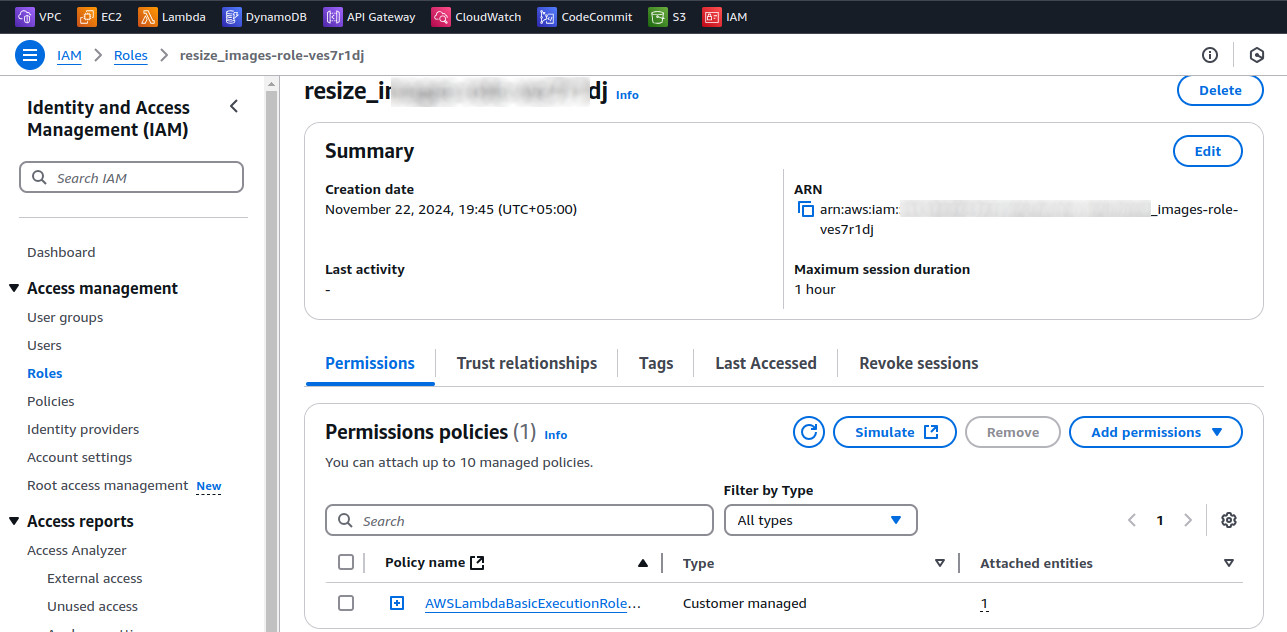

1. In the Lambda function console, open the Configuration tab and select Permissions.

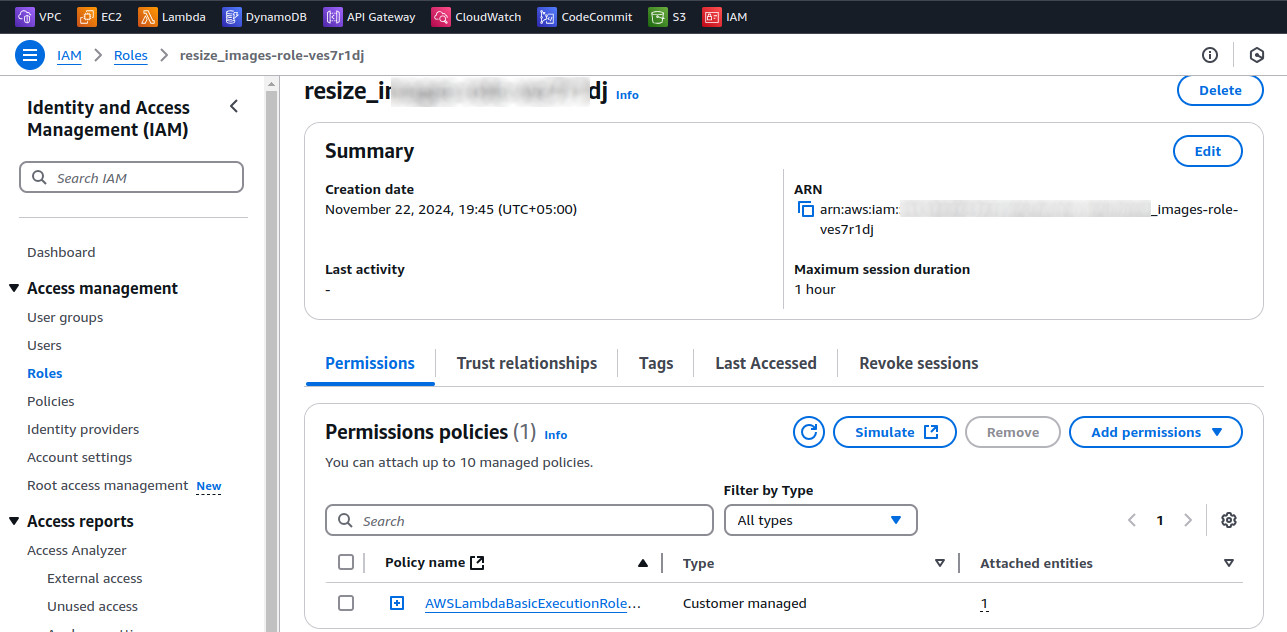

2. Click the execution role (resize_images-role-ves7r1dj). You will be redirected to the IAM console.

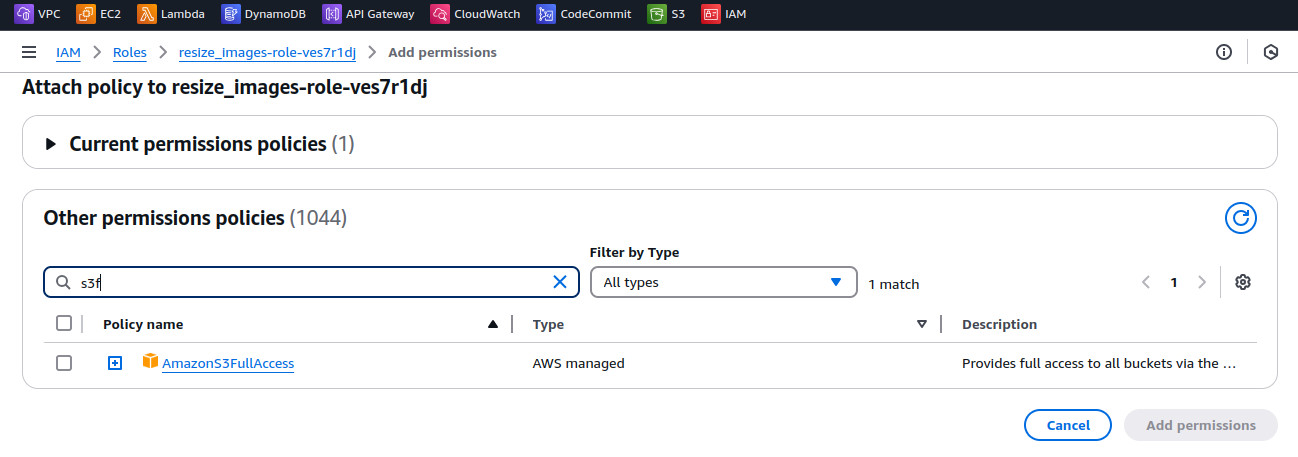

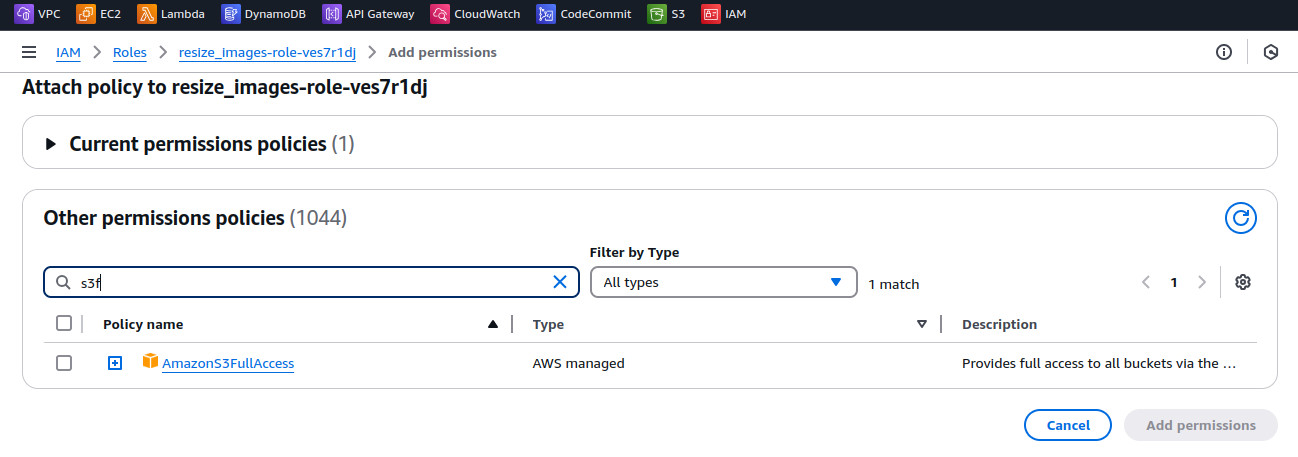

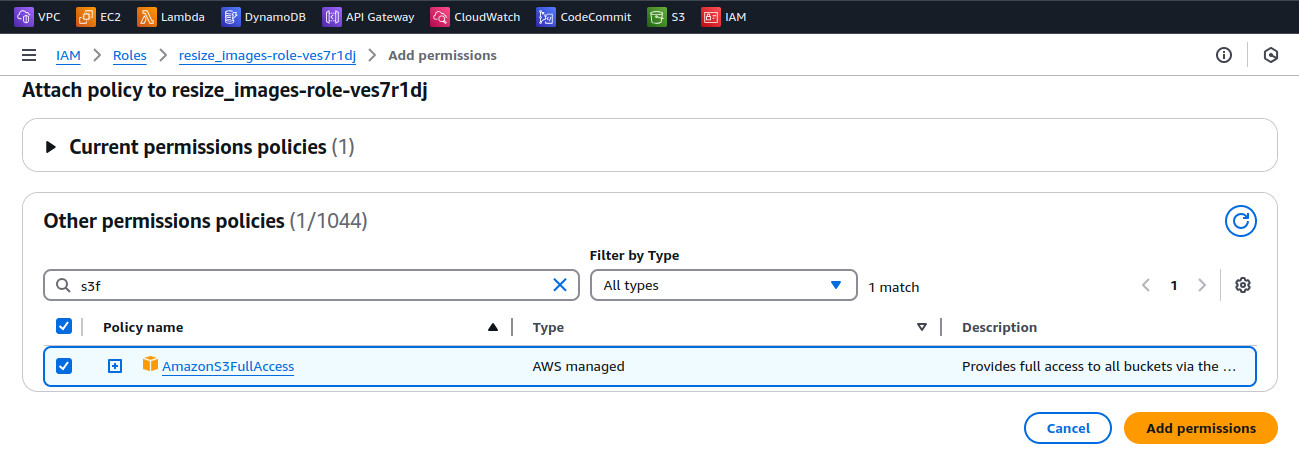

3. Attach the policy to allow S3 access:

Click Add permissions > Attach policies.

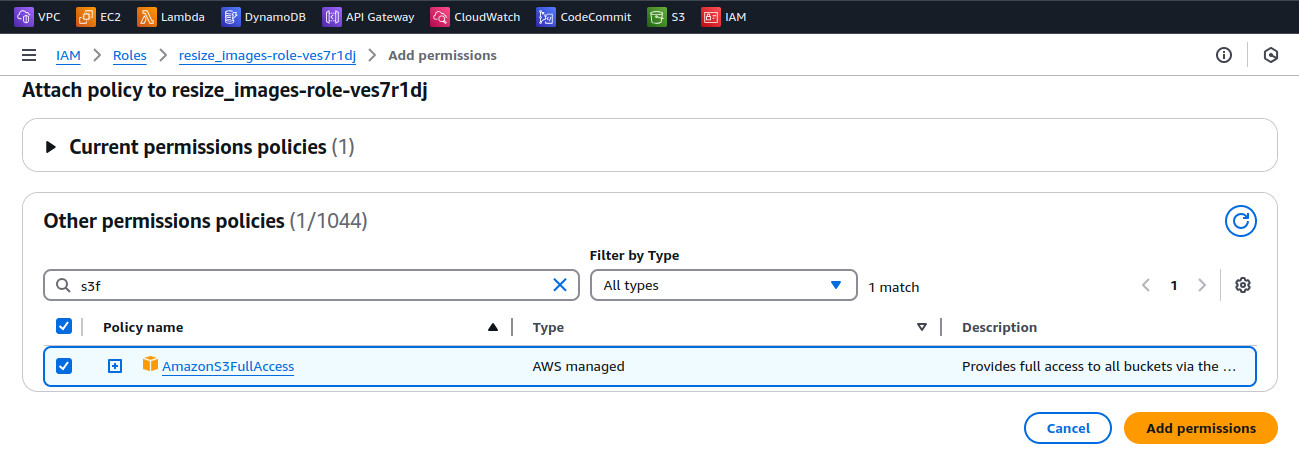

Search for and attach AmazonS3FullAccess. This managed policy grants access to every bucket in the account, so a custom policy granting only read/write permissions on my-bucket-09 is the safer choice.

4. Click Add permissions to finish.
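As an illustration of the custom-policy option mentioned in step 3, the sketch below generates a minimal IAM policy document that allows only reading and writing objects in my-bucket-09. The helper name `build_s3_access_policy` is an assumption for illustration; the printed JSON can be pasted into the IAM policy editor.

```python
import json


def build_s3_access_policy(bucket="my-bucket-09"):
    """Return an IAM policy document allowing object read/write in one bucket."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                # GetObject to download originals, PutObject to upload resized copies.
                "Action": ["s3:GetObject", "s3:PutObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",
            }
        ],
    }


print(json.dumps(build_s3_access_policy(), indent=2))
```

These two actions cover what the function does (download the original, upload the resized copy), which keeps the role closer to least privilege than AmazonS3FullAccess.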